Cathy Gunn, Linda Creanor, Neil Lent, and Keith Smyth

The University of Auckland, New Zealand (Gunn); Glasgow Caledonian University, UK (Creanor); The University of Edinburgh, UK (Lent); Edinburgh Napier University, UK (Smyth)

The influence of academic development work is notoriously hard to measure in ways that fairly represent the links between input and outcomes and are manageable for reporting purposes (Bamber, 2013). Shifting institutional and external pressures, the evolving and multi-faceted nature of the work and a complex set of factors driving the integration of new technologies in teaching and learning are all complicating factors. A conceptual shift from quality assurance to quality enhancement in teaching (Saunders, 2009), and from evaluation of impact to evidence of value (Bamber, 2013), adds to the task in a dynamic field driven by the interplay of context and practice. Gibbs (2013) reflected on how academic development work has evolved over the past 40 years, demanding responsiveness and creativity from institutional managers and academic development professionals alike. Unfortunately, these attributes are not always shared by the institutional settings in which they operate (Gunn, 2010), making the task of fair representation even harder. The term ‘wicked problem’ fits the challenge well: the solution depends on how the problem is framed; stakeholders have radically different ways of understanding the problem; the constraints the problem is subject to and the resources needed to solve it change over time; and the problem is never solved definitively (Kolko, 2012).

Quantitative evaluation is a common approach that suits some purposes and types of academic development activity. Numbers of attendees at workshops and courses, teaching enhancement projects undertaken, pass rates for accredited courses, ratings on feedback forms and statistics on learning management system (LMS) use provide a broad outline for reporting to management committees. However, these measures are unsuited to the embedded activities that have become common in recent years. Reporting by numbers obscures key influences such as the quality of interaction or the synergy of collegial relationships, so the real drivers and processes of change are poorly understood (Stefani, 2010). Qualitative measures require more effort, but the rich descriptions of influences and outcomes they can produce justify the investment. Educational design research, described by McKenney and Reeves (2012), is one example of a suitable and systematic approach. Beginning with theory-driven design, studies progress through collaborative action research cycles with evaluation linked to learning enhancement (or capacity building) goals. Studies are often long term rather than snapshots at a point in time, and they examine complex relationships in which academic development is just one component. The contribution of any element – for example, learning design, discipline or e-learning expertise – is hard to identify and cannot easily be translated into a key performance indicator, which is an increasingly preferred style of reporting. Academic development centres need evidence to demonstrate that their expertise adds value and is a productive way to steer faculty towards achievement of institutional goals for teaching, learning and academic citizenship. This ‘evidence of value’, as Bamber (2013) calls it, shows why different approaches succeed or fail and adds to a growing body of knowledge on academic development. From a management perspective, evidence must show that investment is justified.
Equally importantly, it can demonstrate what is involved in effecting the changes mandated by national or institutional strategy and implemented through the agency of specialist academic development centres. One priority is for academic developers to find meaningful ways to evaluate less visible aspects of their work. Another is to try to shift institutional mindsets to seek evidence of transformation rather than the convenience of numbers. The simplicity of standard reports is appealing but perpetuates the risk of services being set up or restructured without reference to critical elements or to the real value of different activities.

This article outlines the context and role of academic development, drawing on the authors’ experience and key literature sources. It presents case study initiatives that aimed to represent academic development as a change agent at sector, institution, programme and practice levels. The initiatives are creatively designed academic development activities aligned with strategic aims. The challenge now is to devise equally creative ways to gather and present evidence of their influence and value.

Many academic development centres operate in the context of competing pressures to increase scale and professionalise university teaching. Shifting patterns of competition, demand for flexible study options and uses of technology for collaboration and coursework are also common themes. Within this context, two broad aims are to:

Many universities articulate national initiatives and trends through institutional teaching and learning strategies. Specialist academic development centres contribute to shaping the agenda and take a large share in responsibility for implementation. Discussing the work these centres do, Gosling (2008) noted that, to be effective, they promote a range of activities, including bottom-up and top-down approaches and faculty-based and central initiatives. Most retain a degree of freedom to interpret plans as experience and local circumstances dictate. This broad remit informs the discussion that follows.

Common activities supported by academic development centres include: administration of grants and awards; fellowships; showcases; workshops and courses of various lengths; course and curriculum (re)development projects; dissemination initiatives; production and customization of e-learning tools; teaching innovation, evaluation and support services; learning in communities of professional practice; consultations; and collaboration on scholarship of teaching and learning or educational design research. While it is easy to gather, for example, attendance statistics and usage and pass rates, more meaningful evaluation is both complex and problematic.

At national and institutional levels, pursuit of strategic objectives may change culture, build capacity, foster and disseminate innovations and transform teaching and learning to reflect quality enhancement goals. The drivers of this kind of change are complex and difficult to evaluate, even over longer timeframes. In the short to medium term – the current norm for reporting cycles – it is even harder. At practice level, detailed evaluation is also problematic. It requires qualitative data that is not usually collected for various – often practical, sometimes political – reasons and involves causal relationships too complex to untangle; for example, the influence of learning design expertise or the affordances of particular technologies in a teaching and learning context. If the broad aim of academic development is to transform teaching and learning in positive ways, then evaluation should align strategic aims and values with practice through qualitative analysis rather than producing numbers or trying to link cause to effect in an impossibly complex web of relationships. The challenge is to identify the influences on shifting practice and communicate them in acceptable form.

Transformation is by nature a difficult concept to measure. It implies change from a known state to one that can only be described at the level of principle. An initiative supported by the Scottish Funding Council (SFC) for Further and Higher Education described the key principles of transformational educational change as follows:

This definition guided institutions planning transformation initiatives as part of a national quality enhancement plan and demonstrates the fluid nature of the goals involved. With the references to funding removed, it offers a useful basis for evaluating academic development work. The following examples describe evaluation initiatives at sector, institution, programme and practice levels to show ways in which the wicked problem of evaluation might be addressed.

The Quality Enhancement Framework for Learning and Teaching (QEF) in Scottish higher education is a sector-wide policy initiative that aims to rebalance the twin concerns of quality assurance (QA) and quality enhancement (QE). One aim was to shift emphasis from ‘objective measurement’ of outcomes to focus on effective practices and a developmental view of quality (Gordon & Owen, 2006). The desired outcome was changed culture in the sector, visible as transformed practices within institutions and aimed at enhancing the student learning experience. Two of the other three cases featured in this article took place within the policy context of the QEF, with the following principles underpinning cultural change:

It was assumed that the Scottish higher education sector is small enough for each of its 19 institutions to serve a niche market and, therefore, that collegiality would not come into conflict with a need to compete. It was also assumed that institutions would be willing to share experiences and ideas to enhance practice across the sector. While circumstances always differ across contexts, collegiality and sharing of experience are common aims.

To promote consensual development, the QEF was designed to be ‘owned’ by its users, who are both agents of change and subject to change (Saunders, 2009). This is a powerful way to address problems with local interpretation when strategies are tightly defined and imposed from the top. It may also reduce dissemination challenges, as collaborative development generally promotes wider engagement (Gunn, 2010). The SFC, the Quality Assurance Agency for Higher Education Scotland (QAA Scotland) and all higher education institutions were involved in co-creating the framework. This generated a sense of shared ownership and recognised that enhanced practices are context dependent within institutions, where actions align with social context, culture and practice (Trowler, Saunders, & Bamber, 2009). Initiatives were linked at sector level through enhancement themes designed to engage stakeholders and stimulate activity at local level. This encouraged collaboration in many ways but also made enhancement at sector level hard to measure, as the same framework brought about changes in practice and culture that differed widely across institutions.

Representing impact across a sector resists attempts at objective measurement. The QEF was intended to facilitate rather than constrain, so each institution was free to develop its own understanding of enhancement and associated activities. Cultural changes associated with enhancement are best understood in terms of relationships and processes as institutions interact with each other, with professional bodies, QAA Scotland and the UK Higher Education Academy (HEA). Thus, changes at institutional and discipline levels are framed by complex, networked relationships.

It was difficult, therefore, to do a sector-wide review of enhancement. The collective and collegial nature of the framework made it hard to attribute cause and effect where cases of transformation were identified, so understanding of what works and what does not was unlikely to be achieved by conventional means. Rather than seeking objective measures, the SFC commissioned two evaluations (Saunders et al., 2006; Saunders et al., 2009) to focus on the values underpinning the QEF and how well activities within the sector aligned with them.

Culture change was explored through practice and discourse. There are multiple stakeholders, subcultures and visions of enhancement activity within an institution. The evaluations explored the extent to which different views could be combined into a coherent vision and looked for evidence of reflection and reviews of practice. The methods for evaluating cultural change drew on two approaches: utilization-focused evaluation (Patton, 1997) and theory-based evaluation (Fulbright-Anderson, Connell, & Kubisch, 1999). Utilization-focused evaluation considers the needs of those commissioning an evaluation along with other stakeholders and produces results that are usable by all groups. In this case, the evaluation model and tools were developed and used in consultation with stakeholders (Lent & Machell, 2011). Theory-based evaluation helped to articulate the theories of change embedded in the QEF. It promoted understanding of the adaptations that occurred as policy was put into practice and of how the policy changed as it moved through the sector and its institutions. Combined with utilization-focused evaluation, it allowed a testable framework to emerge. In the case of the QEF, this framework related to the values and broad categories of practice that stakeholders associated with positive cultural change within the sector and its institutions.

Common practice was accepted as a key indicator of cultural change. This included ways of thinking and writing about quality and how the stakeholders’ day-to-day practice reflected characteristics of the enhancement approach. It also included practices undertaken by institutions, departments or schools and course teams, along with sector-wide systems associated with the strategy. The focus on practice led to a largely qualitative evaluation approach (supported by quantitative surveys) based on interviews and focus groups with stakeholders ranging from national policymakers, senior university managers and administrators through to teaching staff and students. Discourse was used as a proxy for practice, so judgments were inferred from how respondents described experiences of learning and teaching. These judgments were based on the perceived alignment between the practices and values respondents described and those associated with a culture of enhancement. For example, staff plans to revisit activities in light of changing institutional priorities were taken as aligning with the QEF aim of promoting a reflective culture, and evidence of devolved responsibility for quality enhancement themes and activities was interpreted as a sense of shared ownership (Centre for the Study of Education and Training [CSET], 2008).

The Scottish higher education sector, like others, is a place where multiple policy and practice strands come together, so it is difficult to attribute changes directly to the QEF or any related initiative. Instead, changes were viewed in terms of the degree to which they aligned with the values espoused by the framework. This allowed the diverse range of practices that emerged within the sector and its institutions to be aligned with the values of the national framework as well as with specific local needs.

The sector-wide focus of the previous case goes some way to explaining how the focus of evaluation and useful forms of evidence vary according to target audience. Within an institution, it is also vital to provide information that engages the perspectives of different stakeholders. These may be academic staff seeking to understand the value of innovative teaching approaches, students wanting reassurance of the relevance of collaborative learning activities or senior managers trying to justify continued investment in academic development centres. The example in this section reflects the experience of reporting on technology-enhanced learning at Glasgow Caledonian University and highlights the tension between the demand for quantitative data and the need to demonstrate a positive impact on learning and teaching.

Glasgow Caledonian University has a strong widening participation agenda. Use of technology is integral to the institutional vision and learning, teaching and assessment strategy. Blended approaches present an appropriate mix of face-to-face and technology-enhanced learning activities in the curriculum. Blended learning is co-ordinated by a small central team and supported by learning technologists spread across the three academic Schools.

The underlying model for all academic development at the University is distributive leadership. Influenced by the Australian Faculty Scholars initiative (Lefoe, Smigiel, & Parrish, 2007; Lefoe, 2010), this approach traverses traditional hierarchical structures to empower individuals and teams at all levels to act as opinion leaders and change agents – in this case, to develop scholarship and innovation in learning and teaching (Creanor, 2013).

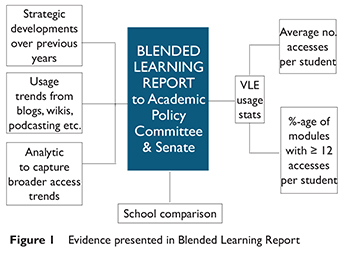

While the underlying model has senior management approval, hard facts and statistics are still the primary evidence required for reporting purposes. An example is the annual report from the blended learning team to Senate, the committee responsible for academic governance and quality issues. The report highlights new developments and levels of use and offers sector comparisons. A range of quantitative data is presented, often in graphical form, along with a reflective account of progress over the previous 12 months (Figure 1).

Due to the nuances of individual module structures and the nature of the analytics processes, statistics do not present a complete picture. Frequent caveats in the report emphasise the fact that they do not actually provide evidence of effectiveness in enhancing learning and teaching, e.g.:

The ‘headline’ data should be interpreted with some degree of caution.

[they] do not present a comprehensive picture of blended learning activity

They do not tell us what kind of learning activities students are engaged in online, or how interactive these might be.

(Glasgow Caledonian University [GCU], 2013)

From a senior management perspective, comparison within the sector is an important part of the report. In the UK, the Universities and Colleges Information Systems Association (UCISA) biennial Survey of Technology Enhanced Learning (Walker, Voce, & Ahmed, 2012) provides a ‘state of the nation’ overview of technology use and support across the higher education sector. The National Student Survey (NSS), commissioned by the Higher Education Funding Council for England (HEFCE), and the International Student Barometer (ISB), run by the Graduate Insight Group, highlight the student learning experience, including use of technology (called virtual learning by the ISB). Beyond these statistics, students can also submit open comments, and it is often this section that provides the most insightful data.

A selection of student quotes is included in the Blended Learning Report (GCU, 2013); however, the real value of qualitative data is in influencing learning and teaching practice where it can be used to encourage the implementation and evaluation of new pedagogic approaches. This is achieved through gathering case studies of best practice, encouraging the formation of networks of interest and hosting cross-university learning and teaching events. Qualitative data can underpin a culture of scholarly collaboration and sharing, in which academic developers play a strategic role.

Despite the limitations of an evaluation that favours quantitative data over the contextualised student experience, positive outcomes include a heightened awareness of technology-enhanced learning and university-wide collaboration on setting future goals to enhance the student experience. A set of recommendations is generated from the report data and the reflective contextual account. Once approved by management, academic Schools and student representatives, these are incorporated into a Blended Learning Roadmap for the forthcoming year. This roadmap focuses on strategic direction, professional development for staff, pilot projects for new technologies and plans for technology upgrades and reviews, with responsibility shared across central departments and academic Schools. Crucially, such an approach to evaluation and reporting reinforces the strategic importance of this key area of pedagogical innovation and addresses “the normally weak linkages between local innovations and institution-wide strategies” identified by Nicol and Draper (2009, p. 203).

The focus now shifts to localised initiatives and how these weak links may be strengthened by meaningful evaluation at that level.

Postgraduate qualifications in higher education and faculty scholarship schemes in teaching and learning are two popular ways to demonstrate strategic importance and ensure the alignment of enhancement initiatives and institutional goals. Such initiatives typically link learning to the participants’ professional context and foster communities of practice across disciplines to break down the silo effect found in many universities (Sherer, Shea, & Kristensen, 2003; Harper, Gray, North, Brown, & Ashton, 2009). This promotes individual career progression as well as a shared agenda to professionalise teaching through accredited qualifications, awards or recognition. The number of accredited courses and pathways into them has grown rapidly in the context of national initiatives such as the QEF in Scotland, the work of organisations including the HEA, the Staff and Educational Development Association (SEDA), the Association of Learning Technology (ALT) and the Higher Education Research and Development Society of Australasia (HERDSA). HEA and SEDA offer professional accreditation against national frameworks for quality in learning, teaching and assessment.

Completion of a Postgraduate Certificate in Learning and Teaching, or Academic Practice, is a contractual requirement for many new lecturers. Although this requirement is not universal, the Browne review on the future of higher education in England (Browne, 2010) called for such qualifications to become mandatory for all staff teaching in Higher Education Institutions. The value of such programmes has been debated (e.g. Mahoney, 2011; Chalmers, Stoney, Goody, Goerke, & Gardiner, 2012; Gill, 2012), but the need to ensure academics are properly equipped to teach is widely accepted. What is questioned is the extent to which centrally delivered programmes in learning, teaching and assessment can be sensitive to the nature and needs of teaching in specific disciplines. The effectiveness of such courses is beyond the scope of this article. However, it is one of many factors addressed in a report commissioned by the HEA (Parsons, Hill, Holland, & Willis, 2012), which recommended finding a more robust way to evaluate the impact of certificate programmes in order to understand their influence on the quality of learning and teaching. One challenge in evaluating these programmes is determining the extent to which they can be seen to have a positive effect on the development of individuals, on programme delivery and on departmental and institutional practice, where many other influences also exist (Stefani, 2010).

Evaluating taught courses for academics is often limited to standard methods (module feedback surveys, numbers of completions, etc.) that provide little insight into impact on the individual or on wider departmental or institutional activities. While there are exceptions – for example, Sword (2010) describes a robust approach involving the “longitudinal archiving” of evidence of institutional change – such methods are both time consuming and uncommon. Noting the relative lack of published evaluations, Bamber (2010) offered examples of programme evaluations conducted in different institutions, which between them employed a range of data collection methods to good effect.

Standard post-provision methods for evaluation have previously been used at Edinburgh Napier University, which runs two postgraduate programmes for academics. The first is an HEA and SEDA recognised PgCert in Teaching and Learning in Higher Education (PgC TLHE) that new lecturers are expected to complete within two years of appointment as a route to an HEA Fellowship. The second is a SEDA recognised PgCert/MSc in Blended and Online Education (MSc BOE). This qualification is linked to strategic technology-enhanced learning goals and the aim to have at least two accredited online educators in each of eight Schools.

Through the standard method of evaluation, and anecdotally, the programme teams know that both qualifications are well regarded and seen as relevant to academic practice as well as wider individual and institutional aims. There is also evidence of impact from other sources, including the number of alumni who are successful in applying for Teaching Fellowships (an appointment based on excellence in learning and teaching demonstrated by a portfolio). However, the available evidence still provides limited insight into the impact of the programmes beyond completion. Further evidence is required to illustrate the wider and longer-term impacts of the programmes.

The challenge of evaluating the PgCert programmes is being addressed in a mixed-methods study during academic year 2013/14. The evaluation aims to “illuminate the link between developing staff as individuals and the strategic Learning, Teaching and Assessment goals of the institution” (Gosling, 2008). The study, which involves participants from the last ten years of the PgC TLHE and the last five years of the MSc BOE, uses a phenomenographic approach with interviews and thematic analysis to explore how the programmes have been perceived and experienced.

Cognisant of the limitations previously noted, the focus of the study is on:

Synthesising qualitative data with other sources, including statistics on promotion of programme participants and non-participants, the study will produce an impact evaluation report for the University’s Academic Standards and Enhancement Committee. Other outputs include a series of ‘good practice’ case studies disseminated via an institutional Learning, Teaching and Assessment Resource Bank (Strickland, McLatchie, & Pelik, 2011) and across the sector. Since there is no established approach for evaluating the impact of PgCert programmes, learning from shared experience in this area is about effective evaluation approaches as well as the impact of programmes on individuals, institutional practice and objectives. Sharing this experience reflects the QEF aim for collegiality around evaluation that focuses on both programme and practice levels.

In a case study describing a project-based approach to e-learning capacity development within a large research university, Gunn and Donald (2010) noted that evaluation permeates all aspects of the work. Using “a systematic process with a significant formative element”, that is, educational design research (McKenney & Reeves, 2012), they found an additional challenge in shifting the common perception of evaluation as something that happens after design and development work is done.

Academic development in e-learning, like other areas, takes many forms and has more and less visible elements. Visible elements include, for example, workshops, seminars, collaborative course development projects and consultations. However, such activities are generally less powerful than embedded approaches – for example, action learning within the context of a collaborative e-learning project – and their influence cannot be separated from other drivers of the transformation of teaching. Embedded activities tend to involve more invisible inputs, for example, expertise to craft a unique design, the judgment of where learning edges are for different team members or the best way to achieve learning goals in the circumstances and for the people concerned.

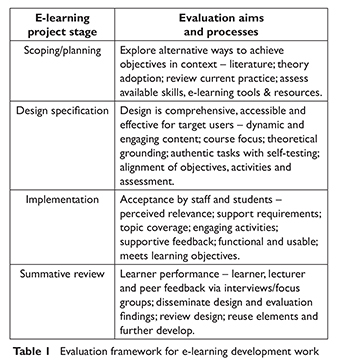

Evaluation of academic development input in this context is additional to evaluation of e-learning project outcomes, and perhaps the most challenging of all. Collaborators are faculty members and colleagues from teaching support departments, who do not come to the relationship expecting to learn anything. The expectation that e-learning staff will do all the work for them is common, yet at odds with capacity building aims. Evaluation of influence can only be approached through qualitative, subjective and medium- to long-term measures, which have yet to be fully implemented. Analytics data is beginning to be used to align learning design intent with learner behaviour and learning outcomes, but this is also at an early stage. E-learning projects use a broad framework with four stages of evaluation (Table 1).

A scoping phase involves negotiation of objectives within project teams. While this is not commonly regarded as evaluation, significant judgment is required to clarify objectives, select a pedagogical approach and determine the best way to implement a blended or e-learning design. Typical methods include literature searches and existing course and software reviews.

Design and implementation are iterative processes where ideas informed by theory are tested in practice and refined as required. Usability testing, student surveys and focus groups, informal feedback and interviews are common methods. Evaluation at this stage is still largely qualitative and part of a formative process. Summative reviews typically track use and learning outcomes through quantitative methods such as student surveys, grades and course evaluations.

The main challenge for summative reviews is to evaluate the impact on teaching and learning without being able to isolate any particular cause of observable outcomes. Impact evaluation is time consuming and requires crafting to produce meaningful results. Unfortunately, it is often reduced to a few questions in a course evaluation survey. Educational design research is ideal for the purpose as it supports theory adoption and testing, reflection on experience and additions to a growing body of knowledge. However, it also presents challenges as ethics consent may be required if results are to be published and faculty who were happy to collaborate on development initiatives may not be ready to engage in detailed evaluation.

The focus and approaches of academic development have shifted in recent years, as Gibbs (2013) notes and the four cases in this article demonstrate. The cases outline the nature of the shift and the challenges of representing the value of the work at different levels and for different audiences. The approaches pursued at each level are underpinned by literature on transformation, academic development and evaluation in higher education. They are presented here to foreground the evaluation challenges currently facing this important area of work. The imperative to find more effective evaluation methods is twofold. Firstly, such methods must provide a sound evidence base for practice. Secondly, and perhaps more compellingly, they must represent the value of the work to senior managers, who are typically not engaged in the discourse but hold the power to construct or deconstruct centres and services. A trend of restructuring without knowledge of the value proposition is cause for concern, as key relationships and influences may be overlooked.

Based on the experience of the case studies outlined and literature cited in this paper, the authors suggest that the following recommendations will help to address some of the challenging issues identified:

A conceptual shift in some circles from quality assurance and compliance to teaching quality enhancement and positive influence is encouraging. The discourse is shifting from ‘impact evaluation’ to ‘evidence of value’, although the question raised by Grant (2013) remains: will this shift be brought to the attention of senior managers? Only a continuous cycle of robust evidence that can inform policy, effect culture change and enhance practice will ensure a confident future for academic development and underline its value across the sector. Although this ‘wicked problem’ may never be solved definitively, these recommendations should help to move the various stakeholders towards a common understanding of the problem and of how its constraints and the resources needed to address it change over time.

Associate Professor Cathy Gunn is Deputy Director and Head of eLearning in the Centre for Learning and Research in Higher Education at the University of Auckland, New Zealand. She has 20 years’ experience of teaching, research and leadership in learning technology in the Australasian higher education sector.

Professor Linda Creanor is Head of Blended Learning in the Centre for Learning Enhancement and Academic Development at Glasgow Caledonian University. She also leads the Caledonian Scholars and Associates initiative, which provides opportunities for staff to engage with innovation and scholarship in learning and teaching.

Dr Neil Lent is a Lecturer in Learning and Teaching at the University of Edinburgh’s Institute for Academic Development. He has been involved in the implementation and evaluation of the Scottish Quality Enhancement Framework in a number of different roles.

Dr Keith Smyth is a Senior Teaching Fellow and Senior Lecturer in Higher Education at Edinburgh Napier University, where he leads the MSc Blended and Online Education and the first module of the PgCert Teaching and Learning in Higher Education.