Amanda Sykes, John Hamer and Helen Purchase, University of Glasgow, UK

Interest in contributing student pedagogies (CSPs) (Hamer et al., 2008), in which students create shared learning resources, has grown as we have moved away from the idea of the ‘sage-on-the-stage’ towards a much greater emphasis on student-centred learning. One widely used and studied CSP tool is PeerWise, a student-centred, collaborative e-learning tool that is freely available online. It enables students to author, answer and evaluate multiple-choice questions (MCQs) anonymously, to rate and comment on questions written by other authors, and to gain badges and scores for participation. It has been used with a variety of student groups in more than 700 higher education institutions across the globe, and in subject areas as diverse as biological sciences (Bottomley & Denny, 2011), chemistry (Ryan, 2013), linguistics (Green & Tang, 2013), nursing (Rhodes, 2013) and political science (Feeley & Parris, 2012).

Instructors are provided with a number of administrative tools that, amongst other things, enable analysis of students’ participation and allow them to read and collate student questions and comments. In particular, the questions that student-authors have decided to delete or edit (and the comments associated with them) are always available to instructors. PeerWise is designed to leverage features of social networking and gaming that students are likely to be familiar with, such as uploading content (in this case, questions), commenting (on the quality of questions), rating (questions and comments), appearing (anonymously) on leader-boards and (privately) collecting badges. For details, see Denny (2013); Denny, Hamer, Luxton-Reilly and Purchase (2008a, 2008b); Denny, Luxton-Reilly and Hamer (2008); and Lutteroth and Luxton-Reilly (2008).

The literature describing and analysing the use of PeerWise in higher education provides strong evidence of positive learning effects, predominantly in introductory science, medical and engineering subjects. Replicated studies showing positive correlations between engagement with PeerWise and higher grades suggest that students gain a clear academic benefit from taking part in PeerWise activities (Bates, Galloway, & McBride, 2012; Denny et al., 2008a; Luxton-Reilly, Bertinshaw, Denny, Plimmer, & Sheehan, 2012).

A concern often raised about student-authored MCQs is quality: the possibility that students will only write questions on easy topics, and that their questions will be of poor quality because they lack expertise in the field (in contrast, of course, to their teachers, who are likely to be specialists). Recent work by Bates, Galloway, Riise and Homer (2014) suggests that students are capable of producing good-quality questions that test more than simple factual recall, in line with other work showing similar findings about quality and topic coverage (see, for example, Galloway & Burns, 2014; Purchase, Hamer, Denny & Luxton-Reilly, 2010). In an invited front-page blog post for the PeerWise Community in 2012, Sykes (2012) noted:

if we make our expectations clear, and provide them with good reasons for those expectations, our students rise to the challenge and are more than capable of writing MCQs that are at least as intricate and challenging as the best MCQs we can write on a good day.

In 2004, Fellenz demonstrated that an assessment task in which students were asked to create MCQs, justify all answers and establish the cognitive level each question was testing resulted in high-level learning. More recently, Feeley and Parris (2012) demonstrated that writing questions using PeerWise can be an effective learning strategy, leading to deeper learning and better recall. Interestingly, though, students using PeerWise are much less likely to cite question writing than question answering as beneficial for their learning (see, for example, Sykes, Denny & Nicolson, 2011). Usage statistics and student feedback show a strong preference for question answering, which often leads to the assumption that drill-and-practice is the predominant learning mode and, consequently, that the main learning gain from PeerWise comes from rote learning.

Our own experience with PeerWise suggests that the situation is more complex and interesting, and that students engage in more than simple drill-and-practice, regardless of what they say they value in feedback questionnaires.

By looking at the discussion forums for the questions that students write, we can observe signs of students learning from and with their peers. Significantly, the more constructive discussions involve uncertainty, which typically arises when a question author makes a mistake that the question answerer seeks to resolve. This uncertainty-resolution enhances learning, as evidenced by the authors’ subsequent editing and deleting of questions.

We are not the first to suggest that uncertainty can be an aid to learning. Draper (2009) points to the process of resolving uncertainties as a mechanism for the learning gains observed in peer instruction. He suggests that as a learner:

finding yourself with a view on something that clashes with the view of a peer tends to cause you to try to produce reasons to persuade them (and yourself) and to leave an internal marker that leads you to work towards finding a resolution. Peers are more of a stimulus in this respect than teachers because you respect them enough to think they could be right, but you are not at all sure whether they are, whereas teachers tend to elicit unthinking acceptance and so actually tend to suppress deep learning.

He argues that the learning gains occur not during the task of answering questions but through the discussion and elucidation of conflict, which provide the catalyst for these gains. Evidence for this, however, is rare and has so far been presented only in studies with children rather than in higher education. One such study, by Howe, McWilliam and Cross (2005), suggested that for collaborative work to produce learning gains, it must ‘prime’ children to take advantage of later ‘useful events’, and that this ‘may depend upon […] contradiction’ (p. 89).

This paper discusses the role of uncertainty-resolution in the use of PeerWise in a Veterinary Medicine course and provides an example of how the elements of discussion, debate and ability to edit can enhance students’ understanding of course material.

In 2010, PeerWise was introduced to a first-year undergraduate class of 145 Veterinary Medicine students (specifically, for the course Biomolecular Science) at the University of Glasgow, with the intention of supporting and enhancing their learning. This is a cohort of highly motivated and academically gifted students who had to achieve high entry tariffs to be admitted to the programme.

An introductory lecture was used to explain the software and what was expected for the activity. The students were encouraged to create good-quality questions, and the nature of good questions was discussed. Students were also made aware that they could rate the quality of their peers’ questions and comment on them, with the advice that comments and ratings should be both honest and fair. Although students were encouraged to take part in these additional activities, they were told that this was not compulsory.

The compulsory element of the exercise was deliberately light. Over the academic year, and split over eight deadlines, students were required to author four questions and answer 40, related to the most recent lecture material. The questions did not need to relate explicitly to published learning outcomes, but should relate to the subject content more generally. Each deadline for authoring a question fell two weeks before a deadline for answering ten questions. In return for meeting these participation requirements, the students received 5% of their final course grade. There was no additional reward for participating in the non-compulsory activities (i.e. rating and commenting).

At the end of the academic year, PeerWise use by these students was analysed. The results showed a positive correlation between students’ engagement with PeerWise and their summative exam scores, consistent with previous studies (Denny, Hanks & Simon, 2010; Denny et al., 2008b). Student perceptions of PeerWise were mainly positive, and students recognised its value as a learning tool (Sykes et al., 2011).

Our research further analysed the data collected by PeerWise from this cohort’s use of the system. In particular, we performed a qualitative analysis of the comments associated with questions to investigate the role of uncertainty in learning. We chose comments because providing them was a voluntary activity and because commenting is relatively unexplored in the literature so far. Our hypothesis is that PeerWise supports learning beyond simple drill-and-practice and beyond the learning that results from creating an MCQ. We suggest that students engaged in PeerWise activity also learn from the controversies that arise through discussion between peers, and that this is evidenced in the comments, the discussions, and the editing and deleting of questions by peers within the system.

The University of Glasgow, College of Medicine, Veterinary and Life Sciences Ethics Committee granted ethical approval for this work.

We analysed the comments the students wrote to their peers about the questions those peers had authored. It is important to emphasise that writing these comments was a voluntary contribution on the part of these students, and that it is in these comments that we expected to find examples of disagreement, discussion and learning, i.e. uncertainty-resolution.

Of the 145 students, 89% (128 students) wrote at least one comment on questions authored by their peers, with 15% (22 students) writing more than 50 comments. The maximum number of comments written by a single student was 356, the mean was 30 and the median, 17.

There are three categories of student-authored questions available for viewing as an instructor: active questions (those available for other students to answer), edited questions (the original version of a question that the author subsequently chose to edit before reactivating it) and deleted questions (questions the author has chosen to delete). Neither edited nor deleted questions are available for other students to answer, but they are stored in the system with all the ratings and comments they attracted before they were edited or deleted. It is in these questions and their associated comments, in particular, that we anticipated we would see evidence of uncertainty and uncertainty-resolution.

We performed a qualitative analysis of comments associated with student-authored questions in the repository. Before selecting a sample of questions to analyse, we ordered all the questions by the extent of commenting, to ensure that we did not choose questions that had no comments. While some of the active questions chosen might also have been present in the edited or deleted set, the comments associated with such active questions would be new, because comments made before a question was edited or deleted stay with the edited or deleted version of that question.

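Each comment contained one or more points made by the commentator. These points were classified by the first rater and then independently verified by a second rater according to six categories: positive, negative, learning from, learning with, criticism and other. A typical exchange between a commenter and a question author is shown below.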

Commenter: ‘Good question however would be nice if to get an we explanation don't have to hunt. I know it says lymphocytes on the slide but also says gamma globulins are antibodies ie not lymphocytes, what do you reckon?’ [sic] Author: ‘I agree. I may have misinterpreted what the slide was getting at after I did some further research (thank you google!)... To avoid confusion I just changed that option a bit. Sorry about the confusion!’ [sic]

Some comments made more than one point and so were classified in more than one category. However, where a comment made the same point more than once, it was only placed in one category. For example, a comment such as ‘great question, really fab’ would be classified as positive once, whereas a comment that read ‘great question, I didn’t know that’ would be classified as both positive and learning from.
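The counting rule above can be illustrated with a short Python sketch. This is a hypothetical illustration only: the classification itself was done by hand by two raters, and the labels attached to each comment below are assumed inputs based on the two example comments in the text, not the output of any automated classifier. Treating each comment's labels as a set means that a point repeated within one comment is counted once, while a comment making two different points contributes to two categories.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical rater output: each comment maps to the category labels its points
# were given. Coding was done by hand; these labels are illustrative only.
coded_comments = {
    "great question, really fab": ["positive", "positive"],
    "great question, I didn't know that": ["positive", "learning from"],
}


def tally_points(coded: dict[str, list[str]]) -> Counter:
    """Count points per category, counting a category at most once per comment."""
    counts: Counter = Counter()
    for labels in coded.values():
        # set() collapses a point made more than once within the same comment,
        # while distinct points in one comment still count in separate categories.
        counts.update(set(labels))
    return counts


print(tally_points(coded_comments))  # Counter({'positive': 2, 'learning from': 1})
```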

We analysed a 5% sample of the 798 active questions (39 questions), a 10% sample of the 101 edited questions (10 questions) and all deleted questions with two or more comments attached to them (8 questions). In order to ensure that there were comments available for us to analyse, we ordered the questions by ‘most comments’ before taking the samples.
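The sampling procedure can be sketched in Python as follows, assuming each question is represented by a hypothetical record holding its status and comment count (the field names are illustrative and are not PeerWise's export format). Questions are sorted by comment count, most first, before the top slice is taken, which is what ensures that the sampled questions carry comments. The sample sizes appear to have been rounded down, so the sketch uses integer arithmetic: 5% of 798 gives 39 and 10% of 101 gives 10, matching the figures above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Question:
    # Hypothetical record; these field names are illustrative, not PeerWise's schema.
    qid: int
    status: str  # "active", "edited" or "deleted"
    n_comments: int


def most_commented(questions: list[Question], status: str) -> list[Question]:
    """Questions with the given status, ordered by number of comments (most first)."""
    pool = [q for q in questions if q.status == status]
    return sorted(pool, key=lambda q: q.n_comments, reverse=True)


def percent_sample(questions: list[Question], status: str, percent: int) -> list[Question]:
    """The top `percent`% (rounded down) of the most-commented questions of one status."""
    ordered = most_commented(questions, status)
    k = len(ordered) * percent // 100  # integer arithmetic: 798 * 5 // 100 == 39
    return ordered[:k]


def deleted_sample(questions: list[Question], min_comments: int = 2) -> list[Question]:
    """Every deleted question with at least `min_comments` comments."""
    return [q for q in most_commented(questions, "deleted") if q.n_comments >= min_comments]
```

For example, with 798 active and 101 edited questions in the list, `percent_sample(questions, "active", 5)` returns 39 questions and `percent_sample(questions, "edited", 10)` returns 10.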

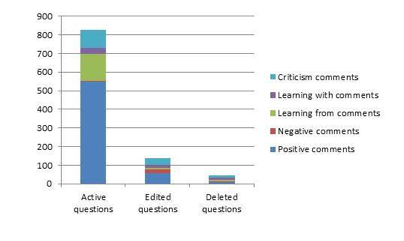

Figure 1 shows the students’ daily activity on PeerWise over the duration of the course; these data set the context in which students were writing, answering, commenting on and editing questions. The number of questions written during the course forms four distinct peaks centred on the four deadlines for question creation. Few questions were written after these deadlines. Question-answering activity shows a number of peaks that are not associated with any externally imposed deadline. There is an initial flurry of interest when the system is first available (including the first two question-answering deadlines), then large peaks just before the third and fourth question-answering deadlines. Other distinct peaks of question-answering activity coincide with the January and May exam diets.

The volume of comments made follows a similar pattern of activity to questions answered with the initial flurry of activity covering the period of the first two question-answering deadlines. Replies lag behind the comments, as expected.

Figure 1: Usage data

The number of questions written in a day does not exceed 65 at any given peak, whilst the largest number of questions answered in any one day is almost 700. At one peak, the number of comments in a day reached almost 140, a remarkable number given that there was no reward for commenting activity, whilst the number of replies in any one day is low, reaching a maximum of only 11 replies (twice).

Since our focus is on learning through discussion, we have chosen the following typical points (taken verbatim from the comments made on the 57 questions analysed) to illustrate the most important categories of learning from, learning with, and criticism. Positive and negative points were simple statements of opinion by the commenter, and are therefore less useful in demonstrating a learning process.

Learning from point

Commenter: ‘Good question… got it wrong as I was not differentiating between synthesizing and encoding… now I will!’

Learning from point

Commenter: ‘Thank you, i actually didnt get this right i thought the answer was D so you taught me something there!’ [sic]

Learning with point

Commenter: ‘i thought that although a fixed ribosome was already on the RER, the protein was orginally synthesis in the ribosome then passed into the main body of the RER itself, which makes C right, comments anyone?’ [sic]

Author: ‘Thats a great point! I completely forgot that as it grew it passed into the rER. Thanks for letting me know - now i wont ever forget that point ;) Answer C would then be correct xD woops’ [sic]

Learning with point

Commenter: ‘Nice test, makes you think of what RER and Golgi actually do differently. Could mabye expand explaination to show why Proper folding, and forming di-sulphid bonds and multimeric proteins can only be done in RER (to help make it knowlege not recall): Because the RER is where the polypeptide chain is synthesised (on a ribosome) it has to form it's tertiary/quaternary structure straight away in order to be stable, only then can it be modified for the job it's destained to do, e.g by carbohydrates being added, or cleavage’ [sic]

Author: ‘Thank you for adding this in to your comment! I didn’t know that before but it makes sense that it works that way…’

Learning with point

Commenter: ‘Lipids make up chylomicrons which carry triglycerides across intestine mucosa. The term "large" is relative and I would consider triglycerides large.’

Author: ‘Ok, I'll take that on board and will have another look tomorrow and maybe change it. Thanks!’

Criticism point

Commenter: ‘not fond of the miss spelled answer choices, would like to see similar answers maybe’

Table 1 shows the number of points made of each type, within each question category (active, edited, or deleted).

Table 1: Number of points (or parts of comments) written for each category of question.

| | Active questions | Edited questions | Deleted questions |
|---|---|---|---|
| Positive points | 547 | 56 | 10 |
| Negative points | 5 | 20 | 5 |
| Learning from points | 144 | 11 | 6 |
| Learning with points | 36 | 15 | 13 |
| Criticism points | 94 | 38 | 13 |
| Other points | 52 | 4 | 7 |
| TOTAL points | 878 | 144 | 54 |

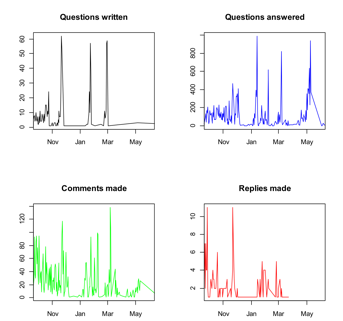

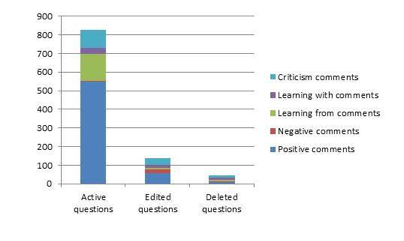

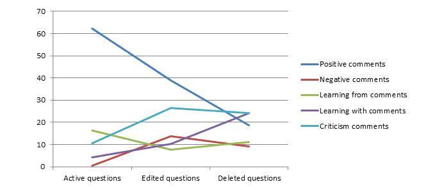

Figure 2 compares the counts of points made within the comments (positive, negative etc.) by question category (active, edited or deleted), and provides an overview of the types of points the students were making for each category of question.

Active questions are dominated by positive points to a greater extent than edited and deleted questions. Negative points are almost absent from the active questions, whereas they do appear in comments on edited and deleted questions.

Figure 2: Counts of comment types by question category

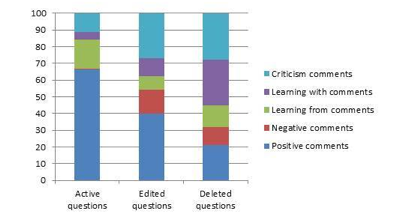

Figure 3 and Table 2 show the proportion of points in each comment category for each of the three question types. This allows us to consider the extent to which comments might have influenced authors’ subsequent editing and deleting behaviour.

Figure 3: Proportions of comment type by question category

Table 2: Proportions (%) of points in each comment category for each question type (excluding the ‘other’ category)

| | Active questions (%) | Edited questions (%) | Deleted questions (%) |
|---|---|---|---|
| Positive points | 66.22 | 40.00 | 21.28 |
| Negative points | 0.61 | 14.29 | 10.64 |
| Learning from points | 17.43 | 7.86 | 12.77 |
| Learning with points | 4.36 | 10.71 | 27.66 |
| Criticism points | 11.38 | 27.14 | 27.66 |
| TOTAL points | 100.00 | 100.00 | 100.00 |
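Table 2 can be recomputed from the counts in Table 1: for each question type, the ‘other’ points are dropped and each remaining count is divided by the new total (826 points for active, 140 for edited and 47 for deleted questions). The short Python sketch below restates Table 1 as a dictionary and reproduces the published percentages to two decimal places; the names `TABLE_1` and `proportions_excluding_other` are illustrative.

```python
from __future__ import annotations

# Counts of points restated from Table 1.
TABLE_1 = {
    "active": {"positive": 547, "negative": 5, "learning from": 144,
               "learning with": 36, "criticism": 94, "other": 52},
    "edited": {"positive": 56, "negative": 20, "learning from": 11,
               "learning with": 15, "criticism": 38, "other": 4},
    "deleted": {"positive": 10, "negative": 5, "learning from": 6,
                "learning with": 13, "criticism": 13, "other": 7},
}


def proportions_excluding_other(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of each category, with 'other' removed from numerator and denominator."""
    kept = {cat: n for cat, n in counts.items() if cat != "other"}
    total = sum(kept.values())
    return {cat: round(100 * n / total, 2) for cat, n in kept.items()}


for qtype, counts in TABLE_1.items():
    print(qtype, proportions_excluding_other(counts))
```

Because each percentage is rounded independently, the deleted-question column sums to 100.01 rather than exactly 100.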

Active questions: The majority of points on active questions are positive, and there are proportionally very few negative points; this is unsurprising. The second-highest proportion of points on active questions falls in the learning from category, meaning that the commenter has learned something from reading and answering the question and has taken the time to tell the question author so. The next highest proportion is criticism, which seems counter-intuitive. However, there is a proportionally low number of learning with points on active questions. This suggests that while commentators might have been critical of questions, they did not give the author suggestions as to how the question might be improved. It is the presence of learning with points that appears to change authors’ behaviour (as shown below).

Edited questions: These are questions that a student has written and then edited and republished (at which point the newly published question becomes active) after reflecting on the question, possibly prompted by comments from their peers. It is in this interaction between students that we suggest uncertainty, and learning enhanced by uncertainty, is likely. The greatest proportion of points here is again positive: these questions were edited despite receiving positive feedback. The next highest proportion is criticism. These points are not negative (a separate category, with the third highest proportion) but generally identify flaws in a question that might be fixed. Learning with points (in which the question author learns something from the commenter) make up the next greatest proportion. These points show that the commenter has offered a solution, or suggestion, to the problem with the question, which has subsequently been edited.

Deleted questions: These are questions that the author has deleted entirely, rather than edited and republished. The greatest proportions of points for these questions are criticism (problems identified with the question) and learning with (in which the author learns from the commenter), which together make up more than half of the points in Table 2 (55.32%; 48% if ‘other’ points are included). Thus, within questions that have been deleted, points that highlight problems with the question, and how they might be fixed, are in the majority, rather than points that are simply negative; as with edited questions, it is in this situation that we suggest uncertainty, and learning from that uncertainty, is possible. Negative points make up only around a tenth of the points written about the deleted questions (10.64% in Table 2; 9% if ‘other’ points are included), suggesting that students are more likely to suggest improvements than simply to be negative about someone else’s work. Again, there are positive points and, even for questions that have been deleted, there is still evidence of commenters learning from the author.

Figure 4 shows the trend in the proportions of the different point categories across the three types of question. Of particular interest is the rise in the proportion of learning with points from active, through edited, to deleted questions.

Figure 4: Trend in the proportions of comment type by question category

The patterns of question writing and answering observed in PeerWise with this cohort of students are similar to those reported elsewhere: students write questions to meet deadlines but answer, comment and reply voluntarily beyond these dates (e.g. Denny, Luxton-Reilly & Hamer, 2008). Given that our data show that these students chose to take time to think about, and provide feedback on, the questions they answered, a question arises: why might a student choose to comment and provide feedback on their peers’ work for no external (i.e. grade) reward? The answers are likely to be complex and interdependent, but we postulate that students wish to invest in question quality, so that the question bank will be of the highest quality when they need it for knowledge acquisition and testing. Alternatively, a student might wish to share their knowledge to support their peers’ learning. It is also worth noting that, through an algorithm built into PeerWise, each student had a participation ‘score’ that increased every time they participated in any PeerWise activity, and they could see how their score compared with their peers’ scores (i.e. whether they were first or fiftieth); this may also have motivated some students. Whatever the reason, full participation in PeerWise is, at least to some extent, altruistic, in that it is not directly rewarded through grades.

Up to this point, we have talked almost exclusively about the extent and nature of commenting, as shown in our data. We have demonstrated that this cohort of students wrote comments on questions that their peers subsequently edited or deleted. We suggested that the points we labelled as learning with or criticism, in particular, supported editing the question to improve it, and that these types of point were seen proportionally less often in active questions.

What is not known is why the question authors responded to these suggestions, either by editing or by deleting (and presumably re-writing) the question. Students were in no way obliged to make changes to their questions. Unless a question was entirely inappropriate, the teaching team did not comment on it at all, and if they did, it was via direct email, not through the PeerWise interface. Therefore, the only likely mechanism that stimulated a student to edit or re-write their question was the feedback provided by their peers. Thus, the uncertainty arising from criticism, learning with and learning from comments often inspired students to act to improve their questions, and those students who chose to edit or re-write had learned from this discussion with their peers.

Much has been written about the benefits of peer feedback. For example, Nicol, Thomas and Breslin (2014) suggest that students often perceive the feedback they receive from peers as “more understandable and helpful than teacher feedback, because it is written in a more accessible language” (p. 103). Nicol argues that effective feedback should be a dialogue rather than the traditional monologue transmitted from instructor to student (Nicol, 2010; see also Topping, 1998; Falchikov, 2004). Sharp and Sutherland (2007) also reported on the perceived value of discussions such as those we are observing. They asked their students to create five MCQs as part of a collaborative task, and feedback from the students suggested that discussing the creation of these MCQs produced the most creative and dynamic interactions within the groups of any part of the task.

We believe we are seeing these mechanisms of feedback and discussion/debate very much in operation, not only as dialogue but as dialogue that leads question authors to change or withdraw their questions in response. This takes place without instructor involvement: the dialogue occurs spontaneously, without prompting or inducement.

So, it is in our observation of feedback on, and dialogue about, question quality between author and commentator that we suggest there is evidence of uncertainty, because it is here that, as Draper (2009) postulated, students as question answerers are trying to resolve the uncertainties created for them by question authors. We believe that student learning is enhanced through the editing or re-writing of questions by question authors, in response to the dialogue around uncertainty-resolution.

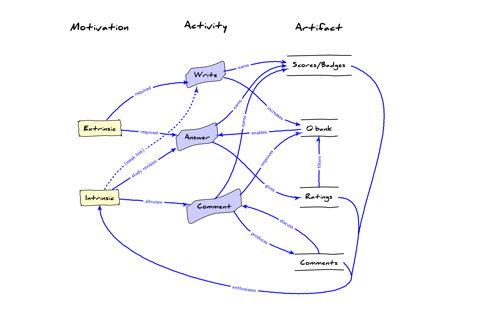

In considering the use of PeerWise beyond simple drill-and-practice, we propose a framework describing the many ways in which PeerWise can support students’ learning, incorporating uncertainty-resolution as part of the learning process.

PeerWise involves three activities (question writing, question answering and commenting on questions) and produces four artefacts (a question bank, ratings of question difficulty and quality, comments, and scores/badges). Students are motivated to take part in the activities and to produce these artefacts by both extrinsic (e.g. being awarded grades or badges) and intrinsic factors. This results in a complex feedback network, depicted in Figure 5. A PeerWise activity starts with writing and then answering questions, often initially motivated by a course requirement imposed by an instructor. As the question bank grows, more questions are available for answering, leading to an increase in answering activity. After answering each question, students are invited to rate the quality and the difficulty of the question, and to leave a comment for the author. Ratings assist in filtering the question bank, enabling questions to be selected more effectively. Writing comments can improve the question bank and can also stimulate a dialogue between the author and one or more students. Score and badge awards are automatically generated from all activities.

Figure 5: A model of PeerWise learning

Denny (2013, p. 764) reported, from a large randomised controlled experiment, that badges increased question answering:

the presence of the badges had a clear positive effect on both the number of questions answered and the number of distinct days that students engaged with the tool, yet had no effect on the number of questions authored by students.

In addition to this link between badges and motivation, we speculate that receiving positive ratings and feedback on the questions they have written further stimulates students’ intrinsic motivation.

Our model has several implications. First, it requires an extrinsic motivator to stimulate the creation of a question bank. Although we have drawn a link between intrinsic motivation and question authoring, this is a potential rather than an actual link: there is little empirical evidence that students are willing to spend the 10–20 minutes needed to write a question unless they have to. Next, it suggests that both extrinsic (imposed deadlines) and intrinsic (e.g. desire for the benefits of drill-and-practice) factors stimulate question answering. However, dialogue, in the form of feedback comments and replies between question answerers and question authors, can arise spontaneously, with no (direct) need for an external stimulus. We have suggested that this dialogue appears likely to be driven primarily by altruistic and supportive behaviour, and that uncertainty-resolution arising from this behaviour leads to learning.

The scope of our conclusions is necessarily limited by the extent of the data stored in the PeerWise system, and we have little knowledge of context (for example, student opinion, the direct quantifiable impact of uncertainty-resolution on student understanding and summative performance, or the wider impact of the discussion between two students). In hindsight, focus groups might have provided useful contextual input from students on whether uncertainty-resolution helped to clarify their current understanding and/or helped them to learn new information. This study was conducted in Veterinary Medicine, where the content at level 1 is typically factual. Subject areas dealing with emotive topics might need more sensitive treatment to ensure that opinions are respected and that the discussion and resolution of uncertainty are approached academically.

However, if, as our data suggest, Howe et al. (2005) and Draper (2009) are correct that uncertainty has a positive impact on student learning, then activities that deliberately stimulate uncertainty should be added to our repertoire of student-centred learning activities in higher education. The bottom right-hand corner of our model represents this uncertainty-resolution that enhances our students’ learning. We recommend that instructors deliberately leverage the potential that uncertainty-resolution offers for student learning. This might include: providing opportunities for uncertainty to arise (through PeerWise or other CSP activities); discussing with students the benefit of clashing with peers and resolving this conflict; asking students to reflect on how they have resolved uncertainty and how this has benefitted their understanding; and providing an opportunity for students to share their experiences of uncertainty-resolution, and what they learned, with the rest of the class.

We have demonstrated that PeerWise is an excellent vehicle for investigating uncertainty-resolution, and we recommend it, and other CSPs, for research into effective collaborative learning strategies.

Amanda Sykes is a Senior Academic and Digital Development Adviser in LEADS (Learning Enhancement and Academic Development Service) at the University of Glasgow. She gained her PhD in virology before moving to work in academic development.

John Hamer received his PhD in Computer Science from the University of Auckland in 1990, where he served as Senior Lecturer until 2010. He currently works as a commercial software developer, and has an honorary affiliation with the University of Glasgow.

Helen Purchase is a Senior Lecturer in Computing Science at the University of Glasgow. She started her interest in e-learning with a PhD in Intelligent Tutoring Systems at Cambridge University, and, together with John Hamer, runs the Aropä online peer-review system.

Bates, S., Galloway, R., & McBride, K. (2012). Student-generated content: using PeerWise to enhance engagement and outcomes in introductory physics courses. In AIP Conference Proceedings, 1413, 123–126. AIP Publishing. doi: https://doi.org/10.1063/1.3680009

Bates, S., Galloway, R., Riise, J., & Homer, D. (2014). Assessing the quality of a student-generated question repository. Physical Review Special Topics – Physics Education Research, 10(2), 020105. doi: https://doi.org/10.1103/PhysRevSTPER.10.020105

Bottomley, S., & Denny, P. (2011). A participatory learning approach to biochemistry using student authored and evaluated multiple-choice questions. Biochemistry and Molecular Biology Education, 39(5), 352–361. doi: https://doi.org/10.1002/bmb.20526

Denny, P. (2013). The effect of virtual achievements on student engagement. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’13), 763–772. New York, NY, USA: ACM. doi: https://doi.org/10.1145/2470654.2470763

Denny, P., Hamer, J., Luxton-Reilly, A., & Purchase, H. (2008a). PeerWise. In Proceedings of the 8th International Conference on Computing Education Research, 109–112.

Denny, P., Hamer, J., Luxton-Reilly, A., & Purchase, H. (2008b). PeerWise: students sharing their multiple choice questions. In Proceedings of the Fourth International Workshop on Computing Education Research, 51–58. doi: https://doi.org/10.1145/1404520.1404526

Denny, P., Hanks, B., & Simon, B. (2010). Replication study of a student-collaborative self-testing web service in a U.S. setting. In Proceedings of the 41st ACM Technical Symposium on Computer Science Education (SIGCSE ’10), 421–425. New York, NY, USA: ACM.

Denny, P., Luxton-Reilly, A., & Hamer, J. (2008). The PeerWise system of student contributed assessment questions. In Proceedings of the Tenth Conference on Australasian Computing Education, 78, 69–74.

Draper, S. (2009). Catalytic assessment: understanding how MCQs and EVS can foster deep learning. British Journal of Educational Technology, 40(2), 285–293. doi: https://doi.org/10.1111/j.1467-8535.2008.00920.x

Falchikov, N. (2004). Involving students in assessment. Psychology Learning and Teaching, 3(2), 102–108. doi: https://doi.org/10.2304/plat.2003.3.2.102

Feeley, M., & Parris, J. (2012). An assessment of the PeerWise student-contributed question system’s impact on learning outcomes: evidence from a large enrollment political science course. SSRN Scholarly Paper ID 2144375. Rochester, NY: Social Science Research Network. http://papers.ssrn.com/abstract=2144375.

Fellenz, M. (2004). Using assessment to support higher level learning: the multiple choice item development assignment. Assessment & Evaluation in Higher Education, 29(6), 703–719. doi: https://doi.org/10.1080/0260293042000227245

Galloway, K., & Burns, S. (2014). Doing it for themselves: students creating a high quality peer-learning environment. Chemistry Education Research and Practice. doi: https://doi.org/10.1039/C4RP00209A

Green, S., & Tang, K. (2013). PeerWise – experiences at University College London. PeerWise-Community.org. http://www.peerwise-community.org/2013/09/13/peerwise-experiences-at-university-college-london/.

Hamer, J., Cutts, Q., Jackova, J., Luxton-Reilly, A., McCartney, R., Purchase, H., Riedesel, C., Saeli, M., Sanders, K., & Sheard, J. (2008). Contributing student pedagogy. ACM SIGCSE Bulletin, 40(4), 194–212. doi: https://doi.org/10.1145/1473195.1473242

Howe, C., McWilliam, D., & Cross, G. (2005). Chance favours only the prepared mind: incubation and the delayed effects of peer collaboration. British Journal of Psychology, 96(1), 67–93. doi: https://doi.org/10.1348/000712604X15527

Lutteroth, C., & Luxton-Reilly, A. (2008). Flexible learning in CS2: a case study. In 21st Annual Conference of the National Advisory Committee on Computing Qualifications (NACCQ 2008). http://www.citrenz.ac.nz/conferences/2008/77.pdf.

Luxton-Reilly, A., Bertinshaw, D., Denny, P., Plimmer, B., & Sheehan, R. (2012). The impact of question generation activities on performance. In Proceedings of the 43rd ACM Technical Symposium on Computer Science Education, 391–396. doi: https://doi.org/10.1145/2157136.2157250

Nicol, D. (2010). From monologue to dialogue: improving written feedback processes in mass higher education. Assessment & Evaluation in Higher Education, 35(5), 501–517. doi: https://doi.org/10.1080/02602931003786559

Nicol, D., Thomson, A., & Breslin, C. (2014). Rethinking feedback practices in higher education: a peer review perspective. Assessment & Evaluation in Higher Education, 39(1), 102–122. doi: https://doi.org/10.1080/02602938.2013.795518

Purchase, H., Hamer, J., Denny, P., & Luxton-Reilly, A. (2010). The quality of a PeerWise MCQ repository. In Proceedings of the Twelfth Australasian Conference on Computing Education, 103, 137–146.

Rhodes, J. (2013). Using PeerWise to knowledge build and consolidate knowledge in nursing education. Southern Institute of Technology Journal of Applied Research. http://sitjar.sit.ac.nz/Pages/Publication.aspx?ID=120.

Ryan, B. (2013). Line up, line up: using technology to align and enhance peer learning and assessment in a student centred foundation organic chemistry module. Chemistry Education Research and Practice. http://pubs.rsc.org/en/content/articlepdf/2013/rp/c3rp20178c.

Sharp, A., & Sutherland, A. (2007). Learning gains…‘My (ARS)’: the impact of student empowerment using audience response systems technology on knowledge construction, student engagement and assessment. In The REAP International Online Conference on Assessment Design for Learner Responsibility, 29. http://www.reap.ac.uk/reap/reap07/Portals/2/CSL/t2%20-%20great%20designs%20for%20assessment/in-class%20vs%20out-of-class%20work/Learning_gains_my_ARS.pdf.

Sykes, A., Denny, P., & Nicolson, L. (2011). PeerWise - the marmite of veterinary student learning. In Proceedings of the 10th European Conference on E-Learning, 820–830. Brighton, UK: Academic Publishing Limited. http://academic-conferences.org/pdfs/ECEL_2011-Booklet.pdf.

Sykes, A. (2012). MCQ writing: a tricky business for students? PeerWise-Community.org, November 12. http://www.peerwise-community.org/2012/11/12/mcq-writing-a-tricky-business-for-students/.

Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249–276. doi: https://doi.org/10.3102/00346543068003249