Celine Caquineau, Kirsty Ireland, Ruth Deighton, Allison Wroe and Kirsty Hughes,

University of Edinburgh, UK

Transition into Honours years is a key stage of development for undergraduate students as they move from the earlier years of their programme, with more supported learning experiences, to a more autonomous style of learning. Students in Junior Honours (Year 3 of their four-year degree programme) are generally expected to have gained an adequate level of the diverse academic skills needed within their discipline but also to work more independently. The development of key academic skills such as scientific writing and critical thinking is mainly encouraged through essay assignments, where students are asked to research a topic and write an essay discussing their findings. These assignments are ubiquitous across undergraduate science courses, but it is evident that students in the later years of their programme can still struggle to gain a clear understanding of what is expected of them (Hounsell, McCune, Hounsell, & Litjens, 2008; Hughes, McCune, & Rhind, 2013).

In order to help students gain these key academic skills, learning activities and assessments should be designed to help them navigate the language and expectations of their discipline and induct them into the discipline’s own academic discourse (Northedge, 2003). Effective guidance and feedback are also needed to help students gain an understanding of what quality looks like in their field and how to monitor and improve their own performance (Nicol & Macfarlane-Dick, 2006; Sadler, 2002). It is well established that improving assessment and feedback practices improves students’ learning (Gibbs & Simpson, 2004), and that effective assessment and feedback practices enhance students’ engagement with learning (Bloxham, 2007).

The Deanery of Biomedical Sciences (BMS) at the University of Edinburgh has recently undergone a curriculum redesign of its undergraduate programmes to enhance the BMS students’ learning experience. At the core of this redesign was the rethinking of the deanery’s assessment and feedback practices, which aimed both to improve students’ academic skills in preparation for Junior Honours and to enhance the quality of feedback provided to students. This redesign aimed to achieve a better balance between assessment of learning (summative assessment) and assessment for learning (formative assessment) for all the deanery’s courses, including large undergraduate courses. Focus was placed on developing and implementing courses that recognise and establish assessment and feedback practices as core learning activities, rather than as remedial ‘satellite’ activities happening post learning (Carless, 2015).

One of these newly implemented courses is Biomedical Sciences 2 (BMS2). BMS2 is a large (more than 250 students), year-long course open to Year 2 undergraduate students enrolled in a biomedical sciences, medical sciences or biological sciences degree programme. BMS2’s main objective is to prepare students effectively for Junior Honours. As a result of taking this course, students should have gained a foundational understanding of core concepts and scientific principles in the disciplines of biomedical sciences, as well as core competencies in scientific learning and disciplinary practice, such as practical skills, data analysis, scientific writing and critical thinking.

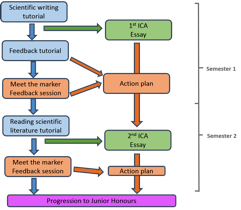

One of the key features of BMS2 is the full integration of the in-course assessment with preparatory and supportive learning activities on scientific writing and critical thinking, and with multimodal feedback and feedforward opportunities (Figure 1). These interactive activities aim to help students to further develop writing and critical thinking skills as well as to better understand what is expected from their work. To ensure that the feedback is effective and that students gain as much as possible from these tasks, feedback is accompanied by appropriate guidance on how to ‘decode’ it and how to act upon it (Hounsell, McCune, Hounsell, & Litjens, 2008).

Figure 1: Integrated assessment and feedback practices in BMS2

Interactive small-group tutorials and feedback sessions were delivered throughout the year around each in-course assessment (ICA). The tutorials aimed to prepare students for the ICA by helping them to understand what is expected from their work and to develop key skills such as scientific writing and critical thinking. Each learning activity built on what was learnt in the previous activities. Students also attended small-group feedback sessions (‘Meet the marker’ sessions) to further discuss the marker’s expectations with their peers. Students were encouraged to reflect and act upon the feedback received by drawing up an action plan to feed forward to the next assessment.

This study is a two-year longitudinal investigation into the impacts of integrated assessment and feedback practices on the development of academic skills in undergraduate students, using Biomedical Sciences 2 (BMS2) as a paradigm.

1. To investigate the potential impacts of integrated assessment and feedback practices on the development of scientific writing and critical thinking skills in Year 2 undergraduate students.

2. To evaluate whether these impacts are long-lasting by investigating the potential impact of integrated assessment and feedback practices on the students’ academic development and performance in Junior Honours.

This study combined quantitative and qualitative approaches to triangulate findings from two cohorts on the following measures:

Cohort A consists of students who were in Year 2 before the implementation of BMS2. Students from this cohort have taken Physiology 2 and Neuroscience with Pharmacology 2, the two semester-long courses previously offered in Year 2. The other cohort, Cohort BMS2, is the first cohort of students who have taken BMS2 in Year 2.

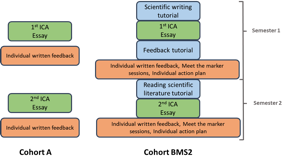

Figure 2. In-course assessment (ICA) and feedback practices in cohort A and cohort BMS2

While both cohorts were asked to write essays on allocated topics and received individual written feedback on their work, students in cohort BMS2 attended additional preparatory tutorials and small-group feedback sessions to ensure the ICAs were effective learning activities fully integrated into the course.

To measure the students’ performance in scientific writing tasks, essay marks were collected in two of the Year 2 courses (Cohort A) or in the new course BMS2 (Cohort BMS2) as well as, for both cohorts, in three of the semester one Junior Honours courses. For cohort BMS2, only marks from students who had attended all the tutorials and feedback sessions in BMS2 were used. Marks from a total of 46 students from cohort A and from 32 students from cohort BMS2 were collected.

The students’ perceptions of their writing skills and of the development of these skills were investigated using end-of-semester questionnaires, tutorial feedback forms (Cohort BMS2), surveys and a focus group (both cohorts). The survey was sent to students from both cohorts during semester two of their Junior Honours. Data from a total of 111 end-of-semester questionnaires and 238 feedback forms were analysed for the BMS2 cohort. A total of 30 students from cohort A and 28 students from cohort BMS2 completed the surveys. Three students from cohort A attended the focus group.

Students were asked to comment on their understanding of various topics and skills, such as feedback, assessment criteria, scientific writing and critical thinking. They were also asked to comment on their confidence in their academic ability and confidence in moving to Senior Honours. In addition, students in Year 3 were asked to reflect retrospectively on whether Year 2 helped them to develop key academic skills and prepared them effectively for Junior Honours.

Finally, semi-structured interviews were conducted with 12 members of staff with a range of disciplinary backgrounds and levels of experience. The aim of these interviews was to draw out the staff perspective on students’ academic skills and the new BMS2 course. Themes used to direct the interviews included quality of academic skills, students’ expectations, feedback, and improving student skills.

For each cohort, essay marks were averaged for Year 2 and for Junior Honours. Mark averages were compared between the two cohorts using a Student’s t-test. Individual mark gains between the Year 2 and Junior Honours mark averages were also calculated and compared between the two cohorts using a t-test with each student’s overall Year 1 performance as a covariate. Data from the Likert-scale questions in the surveys, end-of-semester questionnaires and feedback forms were converted into the percentage of students who selected each option.
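The quantitative steps described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the analysis code used in the study: the marks are invented, the function names are hypothetical, and the covariate adjustment shown (a pooled within-cohort slope, as in a one-covariate analysis of covariance) is an assumption about how a comparison "with Year 1 performance as a covariate" might be carried out. It computes the test statistic but not a p-value, which would require a t-distribution from a statistics package.

```python
from collections import Counter
from math import sqrt
from statistics import mean, variance

# HYPOTHETICAL marks (%), invented purely for illustration; not study data.
# Each tuple: (Year 1 overall mark, Year 2 essay average, Junior Honours essay average)
cohort_a = [(62, 64, 63), (70, 68, 69), (55, 60, 58), (66, 65, 67), (58, 61, 60)]
cohort_bms2 = [(61, 60, 66), (69, 66, 71), (56, 58, 63), (65, 63, 68), (59, 59, 65)]


def mark_gains(cohort):
    """Individual gain = Junior Honours average minus Year 2 average."""
    return [y3 - y2 for _, y2, y3 in cohort]


def student_t(x, y):
    """Two-sample Student's t statistic with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, df


def adjusted_gain_difference(cohort_1, cohort_2):
    """Difference in mean gain (cohort_1 - cohort_2) adjusted for Year 1 marks,
    using the pooled within-cohort slope (assumed one-covariate ANCOVA)."""
    sxy = sxx = 0.0
    means = []
    for cohort in (cohort_1, cohort_2):
        x = [y1 for y1, _, _ in cohort]
        g = mark_gains(cohort)
        mx, mg = mean(x), mean(g)
        sxy += sum((xi - mx) * (gi - mg) for xi, gi in zip(x, g))
        sxx += sum((xi - mx) ** 2 for xi in x)
        means.append((mx, mg))
    slope = sxy / sxx
    (mx1, mg1), (mx2, mg2) = means
    return (mg1 - mg2) - slope * (mx1 - mx2)


def likert_percentages(responses):
    """Percentage of respondents selecting each Likert option."""
    counts = Counter(responses)
    return {option: 100 * n / len(responses) for option, n in counts.items()}


gains_a = mark_gains(cohort_a)
gains_bms2 = mark_gains(cohort_bms2)
t_stat, dof = student_t(gains_bms2, gains_a)
adjusted = adjusted_gain_difference(cohort_bms2, cohort_a)
print(f"t = {t_stat:.2f} on {dof} degrees of freedom")
print(f"adjusted difference in mean gain = {adjusted:.2f} percentage points")
print(likert_percentages(["agree", "agree", "unsure", "disagree"]))
```

Working with individual gains rather than raw marks means each student acts as their own baseline, and the covariate adjustment removes the part of any difference in gain that is attributable to the two cohorts having different Year 1 attainment.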

Students’ responses to the open-ended questions in the surveys were analysed by two researchers to identify key themes to explore further in the focus group. Qualitative data analysis of the student focus group and staff interview data was conducted using QSR NVivo software.

Ethical review was obtained for this study from the University of Edinburgh’s College of Medicine and Veterinary Medicine Students Ethics Committee (MVMSEC).

Students’ performance in essay tasks in Year 2 and in semester one of Junior Honours was compared between the two cohorts to evaluate whether the integrated assessment and feedback practices in BMS2 had an effect on students’ marks at each stage. There was no statistically significant difference between the two cohorts in the essay mark averages obtained in Year 2 or in Junior Honours.

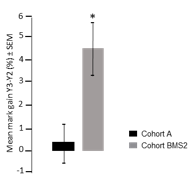

Students’ essay mark progression between Year 2 and Junior Honours was also measured by comparing the gain in essay marks between Year 2 and Junior Honours for each individual student, using their overall Year 1 performance as a covariate. After adjustment for Year 1 performance, we found that the mean mark gain for students in cohort BMS2 was significantly higher than the mean mark gain for students in cohort A (Figure 3), suggesting that, overall, students in BMS2 improved their essay marks between Year 2 and Junior Honours more than students in cohort A.

Figure 3. Gain in essay marks between Year 2 (Y2) and Junior Honours (Y3) in cohort A and cohort BMS2, represented as mean mark gain (%) ± SEM.

The gain in essay marks was found to be significantly higher in cohort BMS2 than in cohort A (*p = 0.004, Student’s t-test; cohort A: n = 46, cohort BMS2: n = 32).

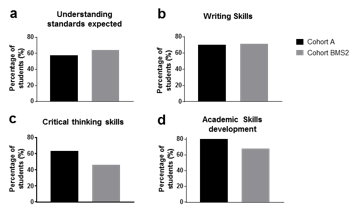

For both cohorts, the majority of students who responded to the surveys and questionnaires felt they understood the standards expected from their work, with slightly more students doing so in cohort BMS2 than in cohort A (Figure 4a). Equally, for both cohorts, the vast majority of respondents felt confident in their writing skills (Figure 4b).

However, fewer students from cohort BMS2 than from cohort A reported being confident in their critical thinking skills (Figure 4c). Although the majority of respondents felt confident in their development of academic skills at the end of Year 2, fewer students in cohort BMS2 than in cohort A reported being confident (Figure 4d).

Figure 4. Students’ perceptions of their skills development at the end of Year 2

Students’ understanding of the standards expected from their work (a), and students’ confidence in their writing (b), in their critical thinking skills (c) and in their overall skills development (d).

Overall the feedback received from students on BMS2 was positive. Students interacted well with the newly implemented tutorials and highlighted the positive impact of the tutorials on their understanding of the ICA tasks and development of academic skills. They also appreciated the diversity in the provision of feedback. Some students were also able to see how what was learnt in BMS2 applied more universally across courses and disciplines:

I have learnt much more about the style of scientific writing than I knew before. I feel much more confident now that I know what I’m supposed to be doing! Really useful tutorial, thank you!

As a direct entrant, I have no experience whatsoever in writing a scientific essay. I have learnt lots on the structure and characteristics of a good scientific essay.

How to take away an action plan from disheartening feedback (very relevant as I’d just received feedback on a draft from another subject).

A number of students were more sceptical about the proposed benefits of the tutorials and did not see the link with the ICA. Some found them repetitive or not challenging enough while others would have preferred tutorials on course content over those focussed on skills development:

Very little was given to us about what to write about in the ICA especially since we hadn’t done anything like it before. The tutorial didn’t help at all either

More intense examples! Go to the EXTREME.

Can we not have a tutorial going over what we need to know from the lectures so we can consolidate our learning? This would be a lot more beneficial!

Other students who had taken BMS2 themselves recognised the challenges of getting students to engage with the in-course assessment tasks for the course, as they saw in their peers a focus on marks and a lack of understanding of the purpose of the task:

I felt like a lot of the students got caught up on whether they got a good mark or not without really considering what the task actually was. And that was definitely true when I had spoken to you about the essay. And then there was a group of people who were very much, they weren’t being spoon-fed enough and they weren’t okay with that. Whereas the whole point of the task is to not do that.

These students also recognised that student engagement with feedback processes could be difficult as those who turned up to the feedback events tended to be the higher achieving students rather than those who need more help:

I think often the people who go to the things that are helpful don’t actually need them. And it’s the people who need them that don’t go […] Because I know it is very much the people in our year who were doing well and coping well with it all that went. And I went a few times and felt intimidated by it and didn’t go back for that reason, just because I felt intimidated and stupid.

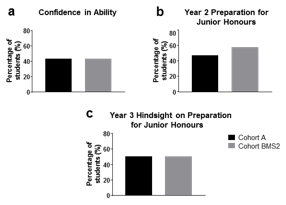

Equally for both cohorts, the majority of students who responded to the surveys and questionnaires did not feel confident in their abilities to do well in Junior Honours, with only 43% of respondents in both cohorts feeling confident (Figure 5a). This suggests that BMS2 did not help students in feeling more confident in their abilities going forward any more than the two previous Year 2 courses.

When asked at the end of Year 2 whether the year had prepared them well for Junior Honours, 57% of respondents from cohort BMS2 agreed compared to only 47% for cohort A (Figure 5b). When asked again during their Junior Honours, 50% of respondents from both cohorts agreed that Year 2 had prepared them well for Junior Honours (Figure 5c). Interestingly, for both cohorts, around 30% of respondents were not able to answer this question as they felt unsure whether Year 2 had prepared them well or not.

Figure 5. Students’ perceptions of their abilities to perform in Junior Honours

Students were asked if they felt confident in their ability to perform well in Junior Honours (a) and if they felt that Year 2 prepared them well for Junior Honours prior to entering Junior Honours (b) and after completing Junior Honours (c).

Qualitative data analysis revealed that students from both cohorts felt the development of key skills necessary for Junior Honours, such as data analysis, critical thinking and critical analysis, had been ignored or not encouraged enough in Year 2 or even from the start of their degree. There was a feeling that more opportunities to try these skills and ‘fail’ in the earlier years of the course, where things did not count towards their overall degree, would have helped them feel better prepared to tackle these tasks in their Honours years.

We’ve barely touched stats, never seen it. And now [in third year] we’re confronted by the fact that we’re examined on it, we’re expected to apply it for our reports are all graded, and some of us just don’t have a clue.

I think reading more scientific papers and critically evaluating them from the very start of our degree would have greatly helped especially in Year 3 when there is a lot of reading to do.

And this year because marks count towards our overall degree mark it’s a bit more kind of like, ‘Oh why? Why have we not been able to do this before?’ And before we could afford to get it wrong.

It became apparent that, for both cohorts, students could not comment on whether they felt well prepared or not for Junior Honours as they had no idea of what they would be expected to do the following year. They did not know what to expect of the standards and demands of Junior Honours. Students also reported a lack of communication about the expectations of Junior Honours. They felt they should have been better informed about what Year 3 would entail and how it differs from the expectations of Year 2.

It isn't clear what is expected of us, for example in exam answers in order to get good grades

From what I’ve spoken to people about that they’ve struggled when they got to third year is the fact that our deadlines are quicker, like fast and furious. They’re always there, they’re always coming, there doesn’t seem to be a week where we’ve not got a coursework going on, which is very different from second year.

Some students were able to retrospectively recognise that their skills and understanding of expectations had developed through transition. The students in the focus groups discussed the ways in which they could look back and see how what they had done was developing their skills in different areas, and what skills they would have liked more practice at. This included assessment tasks that match with what they might do in a real world setting.

I think in second year it was critical thinking, like some sort of a magical cloud that everyone was talking about but you didn’t understand what it is. But I think this year [third year] […] it’s getting a little bit less mystical and kind of more everyday-like, in reports and essays. It’s just, I don’t know, it just sounded much more scary in second year so probably something happened, something changed and you saw that you know whether you wrote an essay and got it back and, oh actually okay for the critical thinking, it was good. I did it, I did something right. So I think yeah, I think it developed.

Because as much as essays are very helpful to encourage wider reading and writing, it’s like lab reports and presentations are what you have to do in the real world outside of university. And I think it’s nice to leave feeling confident that you wouldn’t make stupid mistakes in that kind of situation, where you would be able to present something that was off like the general mean standard.

The staff interviewed recognised the variation in students’ abilities and skills. They acknowledged the challenges of balancing the different needs of students within each course. Some staff explained how they did not initially expect this variability and were unaware of the challenge they faced until confronted with it. The staff interviewed welcomed and supported the concept of integrated assessment and feedback practices as a way to help students develop their academic skills:

Getting students to write an essay doesn’t necessarily make them any better at writing essays but if these tasks are supported and there’s a level of teaching how to approach the task rather than just teaching a subject, then that undoubtedly should lead to an improvement and how engaged the students are and how well they can perform and how they can start to align what our expectations as scientists are of graduates.

They appreciated the challenges of implementing such learning activities for a diverse cohort of students and anticipated varied levels of student engagement with these new practices. The staff interviewed recognised that helping students to develop key skills requires time, resources, a specific type of teaching and staff who can teach these skills effectively:

If we want the students to get the skills […], then we've got to invest in the people who can actually teach that at the right level.

All staff members interviewed had a clear understanding of what quality feedback meant. They understood the importance of feedback on students’ skills development and discussed limitations hindering the provision of effective feedback in large courses. They also welcomed the use of online marking tools in BMS2 to return feedback more effectively.

I think although it was a bit of a shock to the system this year the system that was used in BMS2 for marking the essays where there was a three tiered feedback approach I think was quite a good one because it did give marks on three different levels.

Several members of staff highlighted that a more global view was required when designing courses and implementing them into a curriculum. Some staff explained that there was little benefit in focusing on the final year and end result. Providing students with lifelong skills would, therefore, be a gradual process, built upon by a range of experiences in multiple courses as part of their full university career:

You’ve really got to think at the end of four years, what do we want the students to have and then, almost work back the way and think how do we get them there?

This longitudinal study of a new BMS course found some evidence that integrated assessment and feedback practices and a focus on key academic skills can have a positive impact on student performance, with students achieving better marks in scientific writing related tasks over time, compared to those who had taken the previous year’s courses.

However, despite this improvement in marks, students in BMS2 felt less confident about their abilities in critical thinking, and did not feel any more confident in their academic skills overall or in moving into Junior Honours than the previous cohort. This discrepancy between higher academic performance and lower perceived self-efficacy (the beliefs people have in their capabilities to perform a given task; Bandura, 1994) suggests that students were not able to evaluate their abilities accurately. Since anxiety has been shown to affect self-efficacy (Bandura, 1993), the present findings might suggest that, with a slightly increased awareness of expected standards and more emphasis placed on critical thinking throughout the course, some students in the BMS2 cohort might have become more anxious about, and doubtful of, their own academic skills development and abilities. Monitoring both cohorts’ academic buoyancy and resilience at the start of the study and throughout would have helped establish whether the new integrated assessment and feedback practices directly increased anxiety and thus affected the accuracy of students’ feelings of confidence.

Low self-efficacy has been shown to reduce motivation for learning and to lead to poorer academic performance in the long term (van Dinther, 2011; Prat-Sala & Redford, 2012). It is therefore crucial to help students to self-assess accurately to ensure they can achieve their full potential. Self-efficacy can be encouraged with effective feedback (Briggs, Clark, & Hall, 2012), which students can use as a basis for self-reflection. Since this study, students in BMS2 have been encouraged to reflect further on their performance, strengths and areas to improve through small-group discussions with their peers. Clarification of expectations around assessment is also key to helping students feel more confident, and one way to target this is through improved assessment literacy and helping students gain a sense of what quality work looks like for different assessment types (Sadler, 2002). Clarifying how past learning activities have helped students to gain the skills needed to complete required assessments successfully should also minimise students’ anxiety and thus promote objective self-efficacy in academic skills.

Additionally, as seen in the focus group and surveys, not all students understood the relevance of the academic skills development tutorials, and some would have preferred more content-driven sessions. This highlights the mark-orientated mind-set of some students in early years, and the challenge of conveying the benefits of thinking holistically and of helping students to develop a deeper approach to their learning (Biggs & Tang, 2007). This presents a challenge in developing a student’s understanding of what is expected of them as a self-directed learner who is not there simply to “be taught” (Boud & Molloy, 2013, p. 705). This study also found that students from both cohorts deplored the lack of preparation in data analysis. Although data analysis is a key component of the Year 2 practical sessions, during which students are asked to interpret the collected data, students did not feel confident once in Year 3. Since this study, the development of data analysis skills has been reinforced through a series of learning activities designed to increase students’ theoretical understanding of data analysis, and to give them additional opportunities to put into practice and to discuss what was learnt through specific experiments and problem-solving exercises.

These findings also point to a need to ensure that the expectations of both students and teachers for each year level are clarified to enable efficient transition across years (McCune & Hounsell, 2005). This could be done by exploring with students, at each stage of their programme, the expectations of what a student in that year should be working towards and the academic standards expected of a pre-Honours versus an Honours student. In particular, students could be given an opportunity to discuss what the Honours years will look like and to clarify what they can expect in terms of learning and assessment activities. This has now been implemented with specific sessions entitled “What to expect in Year 3” at the end of Year 2 and at the start of Year 3. Feedback from the staff interviews suggested that this clarification of expectations might also need to be achieved at staff level so that expectations are consistent across the deanery. Careful consideration of transition points and helping both learners and educators to navigate these transitions effectively could help to improve student engagement and understanding of expectations in each year of the programme (Kift, Nelson, & Clarke, 2010).

This idea leads on to another interesting theme which has arisen from this study: learning activities and assessment in specific courses within a programme of study should be considered in relation to other courses within that programme. Results from staff interviews have highlighted that there is a need for the development of skills over the curriculum as a whole. Further to this, the written feedback received on the skills development tutorials suggests that students are already receptive to this idea. Students understand that skills developed in one course can be applied to, and built upon, in another course. This is not a novel idea. It has been suggested in the literature that programmes of study should be structured to allow connection of learning experiences across courses (Fink, 2013). Biggs emphasises that this view should also be taken towards quality enhancement across a higher education institution (Biggs, 2011). In addition to improving the skills development of students in a more efficient manner, by maximising the use of both student and staff time, programme-wide skills development is closer to the style of learning that students will likely experience in the workplace after leaving higher education (Reynolds, 2012). This study complements recent initiatives such as the Programme Assessment Strategies (PASS; Hartley & Whitfield, 2012) and Transforming the Experience of Students Through Assessment (TESTA; Jessop, 2014) projects, which provide plentiful evidence of the benefits for student learning of taking a programme approach when considering assessment and feedback practices. The findings of this study suggest that student skills development would also benefit from taking a whole-programme approach.

Working to clarify students’ understanding of what is expected of them, and taking a programmatic approach to student learning and skills development, would help to consolidate learning experiences across year levels and help students reflect better on their skills development and better evaluate their self-efficacy in academic skills throughout their degree. If students can see the rationale behind each learning and assessment activity, and that these activities are clearly integrated within different courses into an overall programme structure, they may be more able to recognise their skills development over time. This should begin in the first year, helping students to navigate the transition from school to university (Briggs, Clark, & Hall, 2012), and continue through the later years, through the transition to Junior Honours and Honours years. We, therefore, suggest that all learning activities (including assessment, feedback and skills development activities) should be considered from a global programme view rather than in isolation within each course.

The outcomes of this research will inform future assessment and feedback practices in BMS2. They will also inform the deanery’s overall assessment and feedback strategy at the decisive time of curriculum redesign, potentially influencing the design of numerous courses on the undergraduate programmes. Since this study, the Deanery of Biomedical Sciences has started a TESTA project for its Medical Sciences programme, for which BMS2 is a compulsory course. The outcomes of this project will inform the review of the BMS programme. This research will also contribute to the larger discussions on assessment and feedback happening at the University and beyond. It will hopefully give additional supportive evidence on i) the benefits of carefully designed assessment practices for the student learning experience, and on ii) the importance of considering assessment and feedback practices from a whole-programme view rather than at course level.

Taken in isolation, a focus on academic skills development such as critical thinking and academic writing, while improving student marks, may result in lower student confidence in their own abilities and their ability to succeed in Junior Honours. In order to help students navigate key transitions and develop their academic skills effectively, a more holistic programmatic approach is needed which would help students to understand the expectations of their discipline and self-evaluate their own practice.

Dr Celine Caquineau FHEA, is a lecturer in the Biomedical Teaching Organisation at the University of Edinburgh, where she contributes to all aspects of undergraduate teaching (design, delivery, assessment and feedback) in a variety of courses from Year 1 through Honours years. Email: c.caquineau@ed.ac.uk. Specific interests include innovative learning, MOOCs, assessment and feedback.

Dr Kirsty Ireland AFHEA, is a Transcript Editor at the Royal (Dick) School of Veterinary Studies and a Program Developer on Edinburgh University’s SLICCs project within the Employability Team. Email: kireland@ed.ac.uk. Specific interests include reflective learning, MOOCs and creativity in teaching.

Dr Ruth Deighton FHEA, is a lecturer in the Biomedical Teaching Organisation at the University of Edinburgh, where she is the organiser of the Medical Sciences Honours programme. Email: ruth.deighton@ed.ac.uk.

Dr Allison Wroe is a lecturer in the Biomedical Teaching Organisation at the University of Edinburgh, where she contributes to both undergraduate and postgraduate teaching (including design, delivery, assessment and feedback). Email: allison.wroe@ed.ac.uk

Dr Kirsty Hughes FHEA, is a Research Assistant in Veterinary Medical Education at the Royal (Dick) School of Veterinary Studies, University of Edinburgh, Easter Bush Veterinary Centre, Roslin, Midlothian, EH25 9RG. Email: kirsty.hughes@ed.ac.uk. Specific interests include assessment and feedback, e-learning, the student experience and staff development.