Belinda Jane Cooke, Leeds Beckett University, UK

There is ample evidence to show that in order to succeed in higher education, students require the skills necessary to locate, evaluate and use information and resources effectively. Meyer and Land (2003) have listed information literacy (IL) as one of a number of threshold concepts. In other words, IL skills can be seen as “something without which the learner cannot progress” (2003, p. 412). Price, Becker, Clark, and Collins (2011) have argued that the significant advances in the technological proficiency of students are too easily confused with IL: technologically proficient students are not necessarily information literate. IL is a broader concept which includes appreciating the need for information as well as managing and evaluating information, as opposed to simply amassing it. IL has been defined as “awareness of how to gather, use, manage and synthesise and create information” (SCONUL, 2011, p. 3). Designing and delivering effective IL learning opportunities requires a focus on much more than the how of IL, with more consideration given to the why.

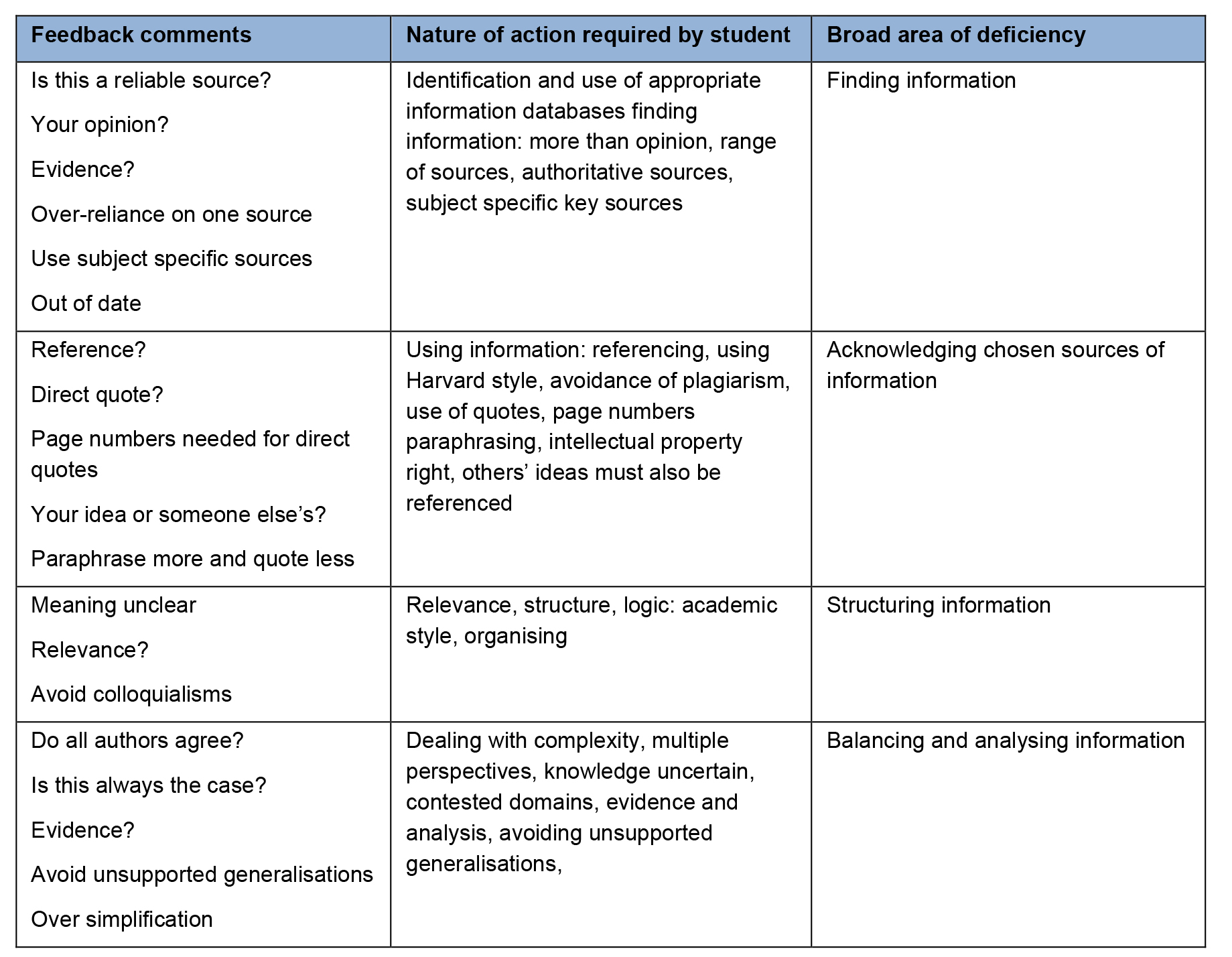

Even a superficial analysis of feedback on first pieces of assessment in undergraduate programmes shows some common and generic deficiencies. Furthermore, experience of running staff development workshops and hundreds of individual meetings with first year students has led the author to conclude that most of these deficiencies are entirely predictable by staff and have significant implications for the design of first level learning activities and assessment. The rationale for the intervention was clear. We gathered examples of tutor feedback on a wide range of first year work, and this revealed some major differences between staff and student expectations of what was required in order to demonstrate that assessment criteria had been met. The majority of the feedback related to evidence of deficiencies in information literacy, more specifically to the gathering, use, management and synthesis of information (SCONUL, 2011). A table of illustrative examples is provided below:

Table 1

Understanding what is required for success in university assessments is a key challenge for undergraduate students, especially since student expectations in terms of searching for and using information are usually very different to what is described in the assessment criteria (Smith & Hopkins, 2005). Students are often able to succeed in school or college based assessments without having had to find information for themselves. For example, in our experience, first year students often expect that they will be able to purchase a course textbook which includes everything they need to know in order to succeed in assessments. They are used to a clearly defined syllabus and scheme of work as opposed to the generic learning outcomes and indicative content which are a feature of higher education. Moreover, the types of assessment they have usually experienced often serve to reinforce notions of ‘right’ (and ‘wrong’) answers. In their first summative assessments, new students were not only relying heavily on internet-based search engines but they were also apparently unaware of the need to acknowledge those sources in order to avoid plagiarism (Yorke & Longden, 2004).

In the past, in order to address these key differences in prior learning, our students have had access to a library induction as well as instruction from module staff about expectations associated with assessment. On the course in question, the former usually took place during induction week, often in a large lecture theatre. This approach presented significant challenges in terms of lack of hands-on experience and was always compounded by the fact that students experienced a significant information overload during this week. A change in delivery, in which these sessions were run as small-group, hands-on, lab-based workshops, improved engagement but did not seem to enhance learning as much as we expected. This was evidenced by repeated, common weaknesses in first year assessments. It seemed that instruction and support at the time of need (Sadler, 2002) might be a better strategy. However, delaying this support until students were engaging in their first summative assessments (the most obvious time of need) would be too late because much of the independent study required each week also relied on students using their IL skills. Furthermore, several key authors (Sadler, 2002; Gibbs, 2010; Race, 2007) had concluded that formative assessment (that which does not contribute to a final grade) was much more effective in enhancing learning and reducing associated anxieties.

The project involved students and staff on an undergraduate Physical Education degree course with cohort sizes of between 140 and 280 students. The author was course leader at the time and module leader of a first year compulsory Personal and Professional Development module and was working with library staff on drafting an Information Literacy Matrix for Leeds Beckett University based on the SCONUL (2011) seven pillars.

Two preparatory activities were completed which also helped to develop a sense of ownership of the intervention. Firstly, the author obtained approximately 300 pieces of uncollected student work and associated feedback from the administration team and analysed them. Example comments were noted, shared with staff and tabulated verbatim (Table 2):

Table 2

Secondly, course staff (n=23) were asked to read and, if necessary, add to the list of most common weaknesses which they had identified in the past on the first piece of undergraduate written work. The consistency of staff experiences was notable and led to our observation that, if we can predict this feedback, what could we be doing to help students avoid receiving it and staff avoid writing it? In other words, what type of intervention might we be able to design to improve performance in summative assessment? Discussion and evaluation identified three key findings:

1. Key differences in student versus staff expectation around academic writing (e.g. unreferenced, plagiarised assignments).

2. Perception that there should be a right/model answer.

3. Most of the feedback relates to deficiencies in information literacy.

In this way a clear mandate and intended outcomes for the intervention were identified. The next stage was to design the intervention. It was decided to embed IL into the course delivery, rather than run discrete workshops in induction week. The simplest way to achieve this was to start with personal tutors and the associated first year Personal Development Planning module. In order to maximise student engagement, the intervention needed to have a clearly defined student output. For a useful and accessible review of the research evidence on this subject see the work of Gibbs (2010). Our intervention was designed in the form of a low-stakes (formatively assessed) piece of writing in week three of the course. Week three allowed some time for students to settle in but was sufficiently early to allow time for feedback and follow-up activities to be completed before the first piece of summatively assessed work. The formative (low-stakes) aspect of the task was designed to reduce anxiety associated with the assessment outcome because, unlike summatively assessed work, it did not affect performance profiles.

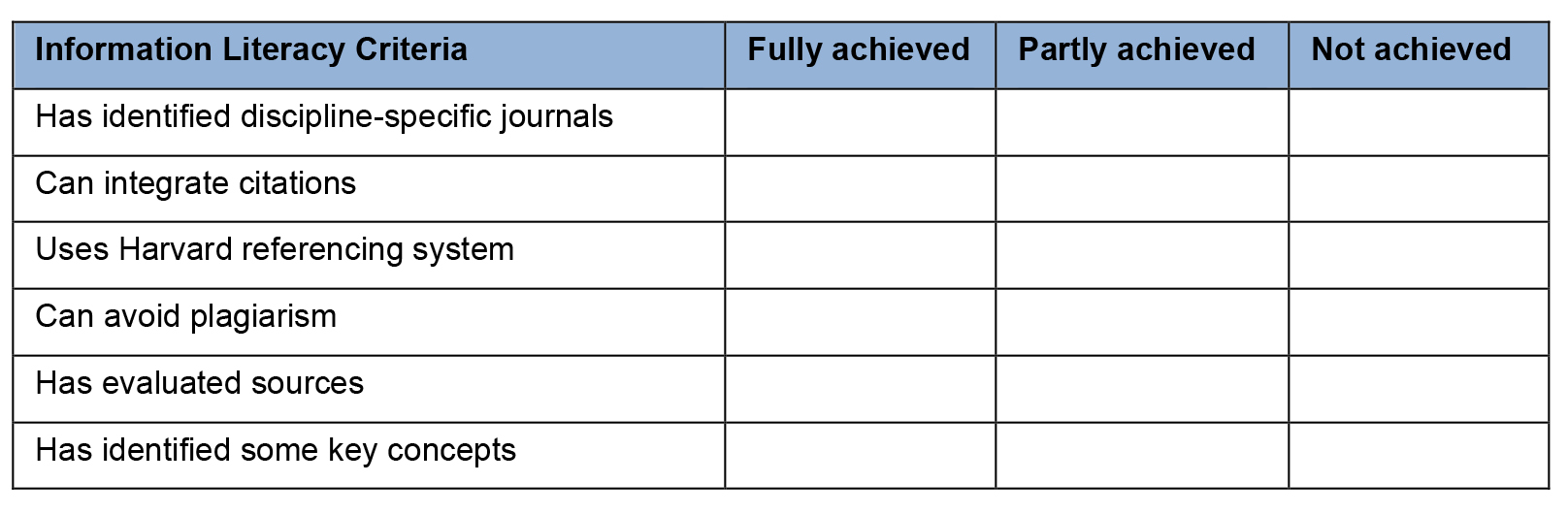

The task was to write a short (500 words maximum) piece which defined the students’ degree subject: in this case ‘What is Physical Education?’. Students were taught IL skills relevant to the task in two small-group sessions, with experts from library staff working alongside personal tutors. Supporting resources were also provided. The work was to be submitted in the usual way but assessed in three ways: self-assessed, peer-assessed and tutor-assessed, each using the same marking proforma. The marking proforma was structured around aspects of the IL matrix and included a broad assessment of the extent to which each aspect was apparent (see the extract in Table 3 below). Differences in identification of IL skills between students and tutors were then highlighted and discussed in tutor group sessions.

Table 3

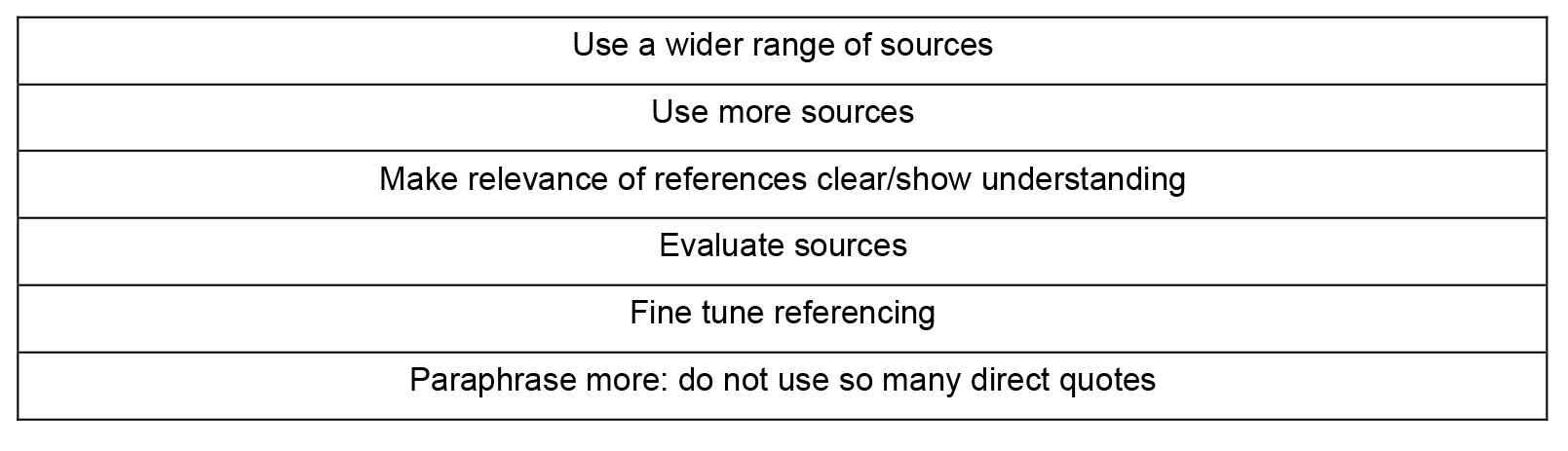

Other key features of the intervention included: sharing and discussing Leeds Beckett University’s information literacy matrix with students, learning to interpret assessment criteria together, development of a student checklist for written work and creating a personal action plan for IL. An analysis of students’ action plans showed that the following actions were most commonly identified by students:

At the end of the first year of implementation, tutors conducted interviews with their students (n=120) about their progress on the course. The IL intervention was identified by every student as being very useful in developing an understanding of what is required in academic writing and especially how to reference sources. Some students reported using the academic writing checklist for other assessments. However, most students recognised that they needed to do more work in order to feel fully proficient with finding and using information, and they suggested that these skills should be reinforced more regularly in other parts of their course.

A staff development workshop at which copies of students’ self- and peer assessments were discussed also included representation from students. Examples of appropriate and manageable follow-up actions were agreed and included improving access to further resources and further review of the intervention as an agenda item at the students’ course annual review meeting.

Staff module evaluations suggested that the assessment tool was easy to use and led to enlightening discussion between students and staff. Staff leaders of other modules noted better performance in terms of range of sources and accuracy of referencing in assessments compared to students on other courses. Better links and dialogue between course tutors and library staff had resulted in improved staff knowledge of IL, and more referrals by tutors of students in need of extra support.

There had been more emphasis on formative assessment as a tool to enhance learning rather than measure it (Boud, 2007).

It is important to note that no additional resource was required to make these changes. We simply made better use of existing personal tutor group sessions. In fact, this approach is less resource intensive: although a small number of students were referred for extra support, it reduced the incidence of multiple individual requests for generic information literacy support from students.

There were a number of key lessons learned. Firstly, the importance of embedding such activities and sustaining opportunities throughout the course. As a result, information literacy work is now linked to specific formatively and some summatively assessed activities in core (compulsory) modules. Secondly, the need for closer working between course staff and academic librarians to produce course-specific support. We no longer deliver separate librarian-led workshops but instead sessions are jointly delivered by course tutors and librarians. Thirdly, the importance of student involvement in the evaluation especially in identifying the need for follow-up activities and support. Fourthly, that linking to action-planning was also particularly beneficial in reinforcing the need for students to take responsibility for their own learning. These last two findings have led to much more systematic use of students’ portfolios to demonstrate their own analysis of their formative and summative feedback from coursework and their related action-planning. These portfolios are indirectly assessed at each level of the course via individual interviews with personal tutors.

Leeds Beckett University has engaged in an institution-wide curriculum refocus which included the embedding of three graduate attributes, one of which is digital literacy. Information literacy is a key component of that attribute and as such has been embedded more widely by design rather than as a ‘bolt-on’ set of additional activities.

Since this initial intervention was implemented we have:

extended the work to other courses using the same materials but with the task contextualised to their degree subject

run sessions for visiting 17-year-olds at open days which have included discussion around expectations of degree level study

extended the initial task so that first year students submit an improved and final version for tutor feedback

mapped where in the modular structure these skills are taught, developed and assessed (as part of Leeds Beckett University curriculum review)

started to develop an Open Educational Resource for staff and students based on the student checklist.

1. Map levels of IL across module assessment criteria and marking schemes in order to improve consistency of expectation and show where and how students can build on prior IL learning.

2. Create a checklist for self-assessment of information literacy skills and link to appropriate learning resources on the virtual learning environment.

3. Research the impact of this work more systematically, e.g. by comparing module marks with those of students on other courses which access the same modules.

4. Monitor the number of students who self-refer or are referred by their tutor to dyslexia support following feedback on this activity and, if necessary, secure additional resources.

Findings from this intervention suggest that students’ understanding of the central importance of IL was enhanced but, more crucially, so was their confidence and competence in demonstrating higher levels of IL in assessed work. The timeliness and embedding of the activities were critical to their success, as was the opportunity to develop and practise information literacy skills in a supportive environment where poor performance would not affect degree outcome. Close collaboration between library staff with IL expertise and personal tutors operating in both pastoral and academic capacities was a key feature of the intervention. Both the resources and the design of this intervention are readily transferable to other higher education institutions, both for single courses and as part of an institution-wide approach to embedding digital literacy as a graduate attribute.