Simon Thomson, Leeds Beckett University, UK

The purpose of this paper is to explore the potential impact of using e-learning frameworks to engage (academic) staff in the discussions and activities related to technology enhanced learning (TEL) development as part of implementing digital literacy as a graduate attribute at Leeds Beckett University.

There is no discernible literature that currently examines the impact of e-learning frameworks, the wider strategies within which they operate and the effect they have on the transformation of practice through the development of academic staff.

The paper will draw upon examples of e-learning ‘frameworks’ in order to clarify the term and indicate how they are used in relation to TEL development activities. In particular, this paper will refer to a framework currently in use at Leeds Beckett University, through which this phenomenographic study was undertaken.

It is important, firstly, to define the key terms found in this paper. For this research paper, ‘e-learning’ and ‘technology enhanced learning’ are the terms used to describe the use of technologies to support and enhance the practices of learning and teaching, as identified by Mayes and de Freitas (2013).

In the same way, the author seeks to clarify the term ‘frameworks’ as used in this paper. For the purpose of this research, e-learning frameworks are formally documented tools, models and approaches to e-learning development that seek to improve the development experiences of (academic) staff. Blake (2009) found in her study that staff often did not make best use of the technologies available to them, nor did they always recognise the value of technologies for their academic practice.

Examples of frameworks established to improve academic staff’s use of e-learning technologies include the 3E Framework developed at Edinburgh Napier University (Smyth, 2013) and the 4E Framework at Leeds Beckett University (Thomson, 2014).

Vrana, Frangidis, Zafiropoulos, and Paschaloudis (2005) provide evidence that both staff and students generally have positive opinions when it comes to e-learning activity. However, the failure of institutions to develop an effective strategy or offer opportunities for staff to engage in e-learning development means that any potential benefits are reduced.

This paper will explore the impact of using e-learning/TEL frameworks to improve academic staff engagement in e-learning development (discussions and activity relating to e-learning) in pursuit of embedding digital literacy as a graduate attribute.

Nicholson (2007) notes that since the 1960s the term and practice of e-learning have evolved in many ways and that e-learning has no “single agreed definition” (p. 1). However, it was the growth of the internet in the late 1990s, and particularly the improved technologies of the early 2000s, that saw e-learning in higher education become a more familiar staff development activity (Vrana et al., 2005).

It was perhaps in the mid-2000s, however, that we saw a strategic shift from sporadic technology use to mainstream implementation in UK higher education (Salmon, 2005). Laurillard (2003) led on a UK government consultation paper titled ‘Towards a Unified E-Learning Strategy’. This paper sought to take “us to a 21st Century education system” (p. 2), with a view to joining up the then Department for Education and Skills’ (DfES) objectives across all education sectors. Its ambition was to lead us into a new era of technology enhanced learning (Laurillard, 2003).

It was publications such as Laurillard’s that encouraged UK higher education institutions (HEIs) to examine, more locally, strategic approaches to e-learning use, linking the technology and the pedagogy.

From our 2015 vantage point, we can see change emerging in the early 2000s with regard to more strategic approaches to ICT activity. Salmon (2005) suggests that “the introduction of information and communications technologies (ICT) into the world of learning and teaching in universities is now in transition from ‘flapping’ to mass take off thanks to appropriate conceptual underpinnings” (p. 201).

The growth of the virtual learning environment (VLE) made the purchase and installation of a centralised e-learning system a strategic objective of many universities. Salmon (2005) also argued for a more strategic approach to e-learning use, either as a centralised, large-scale activity or, more favourably, through an ‘incremental’ approach in which (academic) staff were encouraged to contribute their knowledge and experiences.

Her paper presented what might be considered the first strategic framework for e-learning for HEIs and focused on linking pedagogy and technology more closely to form an e-learning strategy. It was designed to encourage discussion around the pedagogic use of e-learning and to move away from the ‘technology-driven’ focus on creating efficiencies and scaling up learning.

Since 2001, the (usually) biennial Universities and Colleges Information Systems Association (UCISA) survey has reported on the use of ‘technology enhanced learning’ in UK HEIs. Its response rate has continued to increase, and it received responses from 96 of the 158 institutions contacted, indicating that the strategic use of e-learning has continued to grow since the early 2000s (Walker et al., 2014).

It is also notable that institutions responding to the survey report a strategic move from a focus on technology to one of embedding its use to enhance the quality of learning and teaching, as part of a wider institutional learning and teaching strategy (Walker et al., 2014).

What is clear is that a large majority of UK HEIs have a strategy related to the use of technology in learning and teaching and have had one for a number of years. This concise introductory overview offers a lens through which we might better understand how the sector has begun to see e-learning as a strategic activity. However, there are indications that this approach may not be having the impact originally envisaged; this is the focus of the next section.

As early as 2004, it was recognised that models for e-learning were useful as a way for educational practitioners to connect technology use to their pedagogy, but that these rested on the premise that “there are really no models of e-learning per se – only e-enhancements of models of learning” (Mayes & de Freitas, 2004, p. 4).

The past few years have seen the emergence of frameworks developed specifically to make more effective use of technology in learning and teaching. Whereas previous models such as the Conversational Framework (Laurillard, 1999, 2002) were born out of connecting the evolving (internet) technology to the practice of teaching, the expansion of technology into society at large has given rise to new frameworks that seek to bring technologies used outside education into learning and teaching.

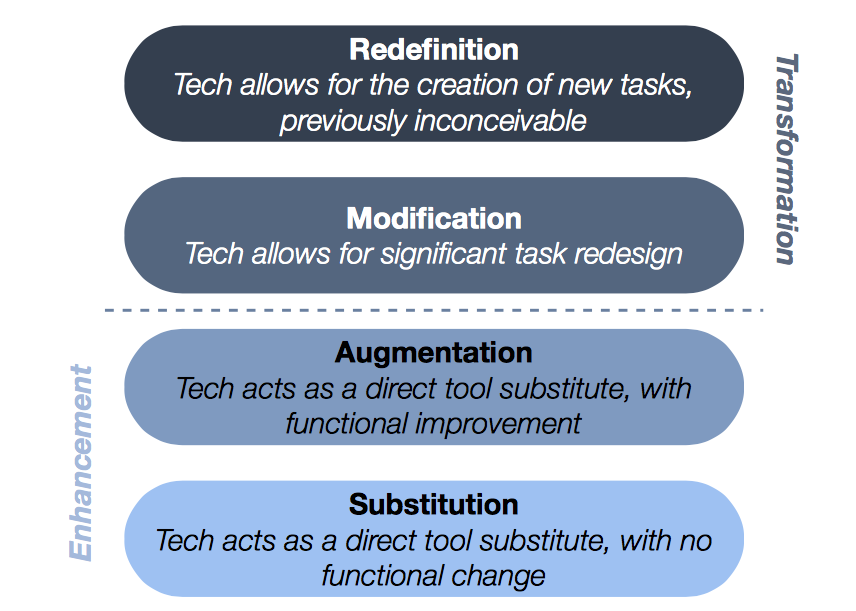

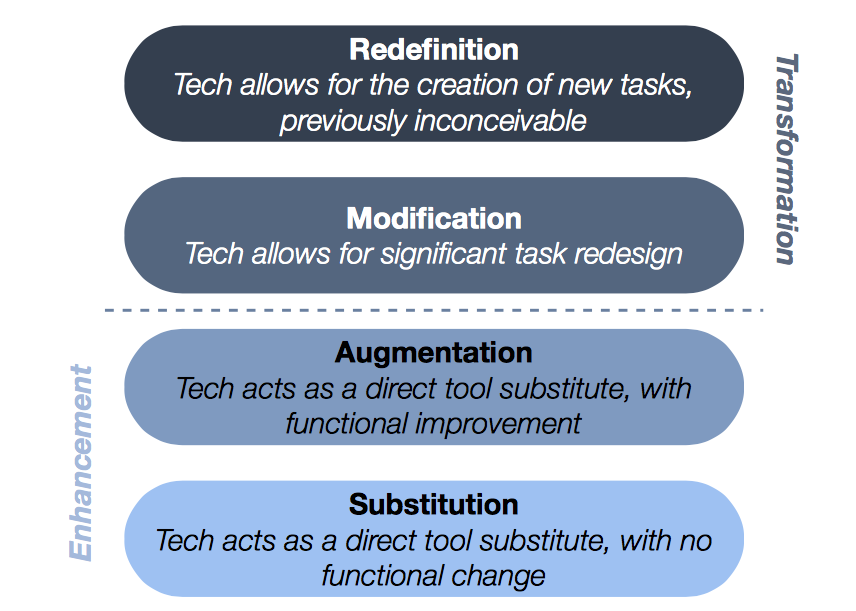

Puentedura (2006) developed the Substitution, Augmentation, Modification and Redefinition (SAMR) model as a method for identifying how technology might impact learning and teaching. The emphasis has shifted from asking whether technology is beneficial at all to assuming that it is (Vrana et al., 2005) and asking how best to implement it within the learning and teaching paradigm.

The SAMR model (Figure 1) was originally developed to assist teachers in the progressive integration of technology into teaching, moving from domain one (enhancement) to domain two (transformation). It is useful to note that the model was not specifically developed for higher education, but has been used at a range of education levels, including kindergarten (ages 4 to 6) through to twelfth grade (ages 17 to 19), known as K-12, in the United States (Hos-McGrane, 2010), and higher secondary level education in the UK.

Figure 1: SAMR Model. Reprinted from As We May Teach: Educational Technology, From Theory Into Practice, by Ruben Puentedura. Retrieved March 24, 2015, from http://www.hippasus.com/rrpweblog/archives/2014/06/29/LearningTechnologySAMRModel.pdf

In this model, it is the technology, and the impact it has on learners and teachers, that takes centre stage. The model assumes that the teacher already understands their students’ pedagogic needs, which increases the scope for it to be applied across a range of education disciplines and levels.

In a similar way, the 3E Framework (Smyth, Bruce, Fotheringham, & Mainka, 2013) seeks to encourage academic staff to develop their use of technology incrementally through three Es:

Enhance: Adopting technology in simple and effective ways to actively support students and increase their activity and self-responsibility.

Extend: Further use of technology that facilitates key aspects of students’ individual and collaborative learning and assessment through increasing their choice and control.

Empower: Developed use of technology that requires higher order individual and collaborative learning that reflect how knowledge is created and used in professional environments.

This is a minimum benchmark framework (Ellis & Calvo, 2007) which is linked to a more strategic approach to institutional e-learning and can also provide a quality assurance measure for the use of technology within an institution. This quality assurance role usually arises where technology is seen as an enhancement activity with regard to learning and teaching (Quality Assurance Agency, Chapter B3, p. 18).

The 3E Framework was developed as a practical framework for academic staff to make active use of technology within the modules they teach and, like the SAMR model, relies on academic staff understanding the pedagogic approaches appropriate to their teaching. The framework website provides practical examples of the 3Es in use across a range of subjects, with a requirement that the minimum ‘enhance’ activity be implemented across the university.

This openly licensed framework has since been adopted at a number of UK HEIs including York, York St John, Liverpool John Moores and Sussex. In each case, it is used as a ‘measure’ for the use of technology in learning and teaching but with a particular emphasis on the use of the VLE.

Building on the openly licensed 3E Framework, Thomson (2014) has extended it to a 4E model with a greater focus on a conversational approach, thus shifting the emphasis away from tools and technologies and towards a staff ‘change’ process. Thomson (2014) makes particular reference to the “change curve”, which considers how staff feel about change, and specifically about technology adoption and use; this is important to the successful embedding of digital literacy. He uses the framework to initiate conversations and activities related to e-learning at subject level.

This framework coexists with a centrally developed digital literacy definition (Leeds Beckett University, n.d.), which seeks to place ownership of digital literacy development and delivery at course level whilst providing strategic support from a central service. A core feature of the framework is its recognition that e-learning change is not a technology-focused activity but one that is centred on teacher and learner interaction (Thomson, n.d.).

This approach is informed by the work of Beetham and Sharpe (2007), who began to reframe the relationship between digital technology and pedagogy towards a learner-focused activity. Laurillard (2007) supports this approach, stating that “transformation is more about the human and organizational aspects of teaching and learning than it is about the use of technology” (p. xvi).

The acknowledgement that e-learning is moving from a focus on the strategic use of technology, as discussed in the previous sub-sections, to one that connects technology with learning and teaching means that we can now explore more clearly users’ experiences of such frameworks. This is also the approach being taken in embedding digital literacy. To do this effectively, the most appropriate methods must first be identified; these are discussed in the next section.

The intention of this research was to explore the relationship between the use of e-learning frameworks and their effectiveness in engaging academic staff in e-learning discussion and activity to embed digital literacy as a graduate attribute. It was decided that a phenomenographic approach would be taken in order to appropriately capture the personal experiences of the participants.

Phenomenography originated from within, and is often utilised to record experiences in, a higher education context. Richardson (1999) makes particular reference to its use in examining ‘problems’ in education research, psychology and the wider social sciences. Marton (as cited in Richardson, 1999) is one of the earliest reported users of phenomenographic research, applying it to students’ individual experiences of a particular activity.

Marton (1986) describes it as “designed to answer certain questions about thinking and learning” (p. 140); it is predicated on qualitative approaches that record the relational experiences of participants within a particular activity (Limberg, 2000).

Its main purpose is to describe the relationship between the subject (the person experiencing the event, task or activity) and the phenomenon itself. Marton (1981) describes this as a second-order perspective, whereby the researcher focuses on the experience of the subject and how they experience the phenomenon.

Limberg (2000) states that the purpose of phenomenography is to “capture the essence” (p. 57) of the phenomena, with participants’ experiences recorded and presented as categories within an outcome space. Phenomenography has long been associated with understanding students’ learning experiences (Stamouli & Huggard, 2007); this is the context from which it emerged and in which it continues to be used, particularly in European education (Koole, 2012). However, it can also be used more broadly to record participants’ experiences of a range of phenomena, including workshops and development events.

One of the key aspects of phenomenography is the development of the ‘outcome space’. Åkerlind (2005) states that this is perhaps one of the least understood areas of the methodology. Marton and Booth (as cited in Åkerlind, 2005) have identified three criteria for judging the quality of an outcome space:

1. that each category in the outcome space reveals something distinctive about a way of understanding the phenomenon;

2. that the categories are logically related, typically as a hierarchy of structurally inclusive relationships; and

3. that the outcomes are parsimonious—i.e. that the critical variation in experience observed in the data be represented by a set of as few categories as possible.

The categories and subsequent outcome space are usually drawn from interview transcripts but can be drawn from other methods (Yates, Partridge, & Bruce, 2012). The researcher analyses the variation between subjects across the transcripts, and through this process quotes and comments are collated, arranged and re-arranged until narrow categories are identified. This iterative process allows the researcher to cluster both similarities and differences in experience. For the purpose of this study, interviews formed the basis from which the categories and the outcome space were developed. In larger-scale phenomenographic studies, it can be desirable to have multiple researcher perspectives in order to ensure reliability of the data interpretations. Åkerlind (2005) suggests that two types of check are possible through this process:

1. Coder reliability check, where two researchers independently code all or a sample of interview transcripts and compare categorizations; and

2. Dialogic reliability check, where agreement between researchers is reached through discussion and mutual critique of the data and of each researcher’s interpretive hypotheses

(Åkerlind, 2005, p. 331)

Phenomenography was selected for this study because of its potential to identify the variation in learning experience (Marton & Booth, 1997) among staff undertaking a workshop activity relating to an e-learning framework. This methodology provides an opportunity for the author to consider the effectiveness of e-learning frameworks based on the variation of experiences and their subsequent categorisation. It is anticipated that any such categorisation and development of the outcome space will assist in the development of the framework.

As previously described, phenomenography is a research methodology relying almost entirely on the qualitative interview process. Given the timeframe of the research activity and the availability of participants for interview, purposeful sampling (Palinkas et al., 2013) was applied to identify four potential interview candidates from Leeds Beckett University. To increase the likelihood that interviewees would have varied experiences from which categories, and then the outcome space, could be developed, they were selected based on their role within the institution. The framework response data they provided through the mapping exercise during the activity (the phenomenon) was also used to judge whether their interview responses were likely to be valid and reliable (Englander, 2012).

The pool of potential participants was also limited to those who attended the activity/event, but it was felt that the interview participants selected, described below, were likely to offer sufficient variance. All participants are from Leeds Beckett University and are familiar with the implementation of the 4E e-learning framework.

Participant one: A long-serving senior academic staff member with a school-wide role leading the local strategy for staff development in TEL. This participant would not only have the perspective of an academic staff member using the proposed e-learning framework, but might also offer insights into the use of the framework within a wider development plan.

Participant two: A more recently appointed academic staff member and module leader who has previously attended centrally delivered TEL activities and has a particular interest in developing their learning and teaching activities after completing their Postgraduate Certificate in Academic Practice (PGCAP).

Participant three: A senior learning technologist who works in a central service with a specific role to develop e-learning and TEL activities for academic staff as part of a wider academic staff development programme.

Participant four: A professor of learning and teaching with a role to develop and lead the University’s learning and teaching strategy (which encompasses the e-learning strategy).

These participants were all invited to individual semi-structured interviews. Participants one and three were interviewed within one week of the phenomenon and participants two and four within two weeks of the event.

The interviews were audio recorded and then transcribed for coding. All participants had taken part in the 4E Framework activity, which is summarised in the next sub-section.

Before the research subjects could be interviewed and their data categorised, it was necessary to engage them in a shared phenomenon. The participants were all invited to a specific module mapping exercise that followed on from previous discussions about a newly implemented framework.

The purpose of the mapping exercise was to use the 4E Framework (Thomson, 2014) to describe, in narrative form, how the participants were currently using technology in their modules against the 4E descriptors.

The 4Es are Enable, Enhance, Enrich and Empower, and each can be used to frame questions about the use of e-learning in the following ways:

Appendix 2: 4E Framework. (Thomson, 2014). Leeds Beckett University. Retrieved from https://www.leedsbeckett.ac.uk/partners/4e-framework.htm

The participants were presented with the 4E Framework prior to the mapping exercise and asked to provide at least one example of how they were using technology within their module against one of the 4Es.

Throughout the workshop the participants were encouraged, through discussions, to share their practice and record their use of technology with reference to its impact on learners.

The workshop activity lasted approximately 45 minutes.

Ashworth and Lucas (2000) state that the interview should be seen as a “conversational partnership” through which interviewees reflect upon their experience of the phenomenon. They also emphasise the individual nature of each account of the event; for this reason, individual interviews rather than focus groups were conducted, to avoid any potential cross-contamination of experiences between participants.

Pre-determined questions should be kept to a minimum; where they are used, they should be open-ended, allowing the interviewer to draw out and clarify the participant’s experience.

The following questions were developed in order to frame the conversation for the semi-structured interviews:

The questions were intended to keep the interviewees focused on the activity and on their experience of it.

Gorden (1998) identifies the need to code interview transcripts in order to use them effectively in analysis. Collier-Reed, Ingerman and Bergman (2009) identify multiple-researcher coding, and comparison of the results, as part of a process to ensure “dialogic dependability”, through which categories are constructed and then re-affirmed by other researchers. As the author is the sole researcher in this study, the transcripts were coded directly to indicate potential categories as part of the phenomenographic approach.

Interviewees were invited to review a copy of their transcript prior to its analysis and coding (although none requested to do so).

Through the coded analysis of the transcripts from the four participants (Appendix 3), three categories were identified. Each category represents a way of experiencing the activity that was distinct from the others. In this way, the categories help to identify the distinctive experiences that participants had as part of the phenomenon.

Interviews were held in quiet locations of the participants’ choosing, with a scheduled time of 60 minutes (although none of them lasted longer than 45 minutes). Audio recordings were made and transcribed, and the data was coded with a focus on the participants’ experiential comments. Example supporting statements from the transcripts are presented below for each category, with participants identified as P1 to P4. Despite the small sample, purposeful sampling provided enough variance in experience from which to identify the categories below.

In the first category, the framework activity is seen as helping to identify the ways in which TEL activities are benefiting learners. Using the framework gives staff a means of explaining to students the use of digital tools as part of their learning and teaching experience. This category is focused on teacher and learner interaction and its practicalities. Its horizon might be considered localised, within a module or course.

“I can see myself using this to talk about digital tools in my module guide. They (students) often ask me why we are using certain tools and this will help me.” (P2)

In this statement, the participant makes a connection between the framework and learner/teacher interaction. In this case, the framework has the potential to be extended beyond staff use and into student use too.

In the second category, the activity is seen as a way to share best practice with staff and show them what they could be doing with digital tools. The framework is used to ‘label’ uses of technology and software and to share these with others. This category is focused on teacher-to-teacher interaction, encouraging the sharing of best practice. Its horizon is more outward facing, moving towards an institutional horizon.

“I can absolutely guarantee that there are people doing things I would want to try and copy if only we all knew what each of us was doing, so yeah I thought [it] was an excellent way to use the framework.” (P1)

Participant one expresses a desire to gather the best practice of others for use in their own learning and teaching. They indicate that the framework offers an opportunity to collate this best practice, map it and share it in an accessible way.

“It provides an opportunity to gather best practice and plan dissemination activity.” (P3)

Participant three also sees the framework as a basis from which to run future activities focusing on the gathering and sharing of best practice.

In the third category, the framework is seen as a tool to measure e-learning activity for reporting purposes. It can be used to establish current levels of e-learning activity, which can then be analysed periodically to show change. This category potentially has the broadest horizon, including senior management teams and potentially an external-facing horizon. The data gathering and reporting aspect may well be used as evidence for the Quality Assurance Agency (QAA). Although the data might be similar to that in category two, the nature of its reporting means the potential audience for its use is extended.

“The framework allowed me to consider how we might adapt it to encompass more than e-learning, but also to capture where we are at and plan where we might wish to go, strategically.” (P4)

Here, participant four is considering how the 4E Framework can be extended beyond e-learning to other strategic activities. In contrast to the previous participants, this perspective moves the framework from its human-centred design towards a more strategic one.

The three categories can be represented visually as an outcome space. Figure 2 maps the categories against their potential horizons and also attempts to draw in potential audiences for the framework data gathered through the activity.

Figure 2: Graphical representation of the outcome space, indicating the broadening horizon of the categories.

The intended audiences correspond to the widening horizon that the framework might have. The smallest horizon relates to internal sharing with a student group (perhaps a module); it is internally focused and limited to module or course level. The next category expands the horizon to institution-wide sharing of best practice between staff, beyond individual schools and faculties. The final category extends beyond the University: here the framework was considered as a means of reporting e-learning activity to external stakeholders such as the QAA.

If we refer back to the outcome space quality criteria that Marton and Booth (as cited in Åkerlind, 2005) suggested, we can observe the following:

1. That each category is distinctive within the outcome space. In this case the distinctiveness comes from the focus on potential intended audiences for the framework.

2. That the categories are logically related. In the derived outcome space there is an inter-relationship between the intended audience and the purpose, but in each case it is the recipient that is the related element.

3. That the number of categories is as few as possible (in this case, three) and could not be reduced any further. Once again, it is the intended audience that defines the categories and the widening horizon through which the framework could be implemented. Any further reduction in categories would mean a loss of the variance identified through the interviews.

The categories suggest that the framework is being used at Leeds Beckett University with a range of audiences and, although the 4E Framework was not specifically designed for students, it has the potential to provide a structure for staff to extend e-learning conversations beyond their peers to their learners. This is particularly useful given that the digital literacy graduate attribute is centred on student development, and suggests that discussing the 4E Framework with students could support their own understanding of digital literacy.

This research suggests that, whatever the initial intentions behind a framework, its implementation within the University and its wider use will be adapted according to the user and the potential audience. The strength of the framework is its flexibility: it can be used in whichever way best supports a particular user’s needs. In the same way that digital literacy means something different depending on the subject area, the 4E Framework can be used by both staff and students to improve the embedding of digital literacy across the curriculum.

It is clear that such a framework does have value and this research activity will be used to help inform the continued development of the 4E Framework at Leeds Beckett University and wider implementation of digital literacy as a graduate attribute.

It is important to consider the limitations of this research activity, in order that any implications of it might be considered in context.

Ashworth and Lucas (1998) consider bracketing of the interviewer’s own assumptions and theories to be an important part of the phenomenographic approach. The author has endeavoured to remain detached from his own ‘life-world’ during the interview and analysis stages, but found this particularly challenging during the interviews, as he has pre-existing professional relationships with all of the interviewees.

Every attempt has been made to ensure the validity and reliability of the data in the development of the categories. All interviews were conducted using the same trigger questions; audio recordings were transcribed and anonymised before any coding was undertaken; and the transcripts were coded twice to improve reliability. However, the author recognises that the interviewees may also have brought their pre-existing relationship with the author to the interviews. In particular, the 4E Framework at Leeds Beckett University is part of a wider e-learning strategy for which the author is responsible. Although the interviewees were encouraged to be fully open in their responses, the author cannot be certain that they were.

In this small-scale study, it has not been possible to implement a reliability check such as those proposed by Åkerlind (2005).

Although there is no prescribed sample size for a phenomenographic study, the sample needs to be large enough to capture variation in experience. It was anticipated that the sample would be small for this research activity, so purposeful sampling was used to mitigate this. However, a larger sample would have been preferable for this study and will be a consideration in further research.

The purpose of this study was to identify to what extent the use of the 4E Framework at Leeds Beckett University was effective in its intended purpose of engaging (academic) staff in TEL activities and discussions.

This work has provided insight into how the 4E Framework can now be extended beyond the original intended audience(s). These multiple perspectives can be used to better inform the development of the framework and the methods for its effective implementation to support digital literacy as a graduate attribute.

Although the participant numbers were small, the resulting outcome space can be considered valid for the participants involved and can therefore be used to inform a strategy for how the framework can provide a common language and structure through which a range of stakeholders can share experiences and conversations around using technology in learning and teaching.