Paul Middleditch, Economics DA, University of Manchester, UK

Will Moindrot, Department of Education, Liverpool School of Tropical Medicine, UK

UK universities find themselves operating in a challenging environment. Domestic students have seen tuition fees rise to meet the £9k cap, alongside a reduction in research funding from central government. An increased dependency on student-generated revenue, and the need to teach ever larger groups of students on popular courses, has left universities competing with each other through various rankings to attract and retain students. Attaining positive student opinion has never been so important, and institutions have shifted their focus toward meeting student expectations (Walker, Voce, & Jenkins, 2013).

Through the commoditisation of higher education, students inevitably view education in terms of the quality and value for money of the learning programme and the wider environment. School leavers carefully weigh the costs and value of traditional university programmes against other forms of learning such as work-based, flexible and distance learning. With the advent of the rich communication technologies used so well by massive open online courses (MOOCs), young people are finding alternative modes of learning more attuned to their interests and to the ways they choose to interact with the world. By seeking out alternative forms of education, candidates are able to distinguish themselves on the job market from others who are fresh out of a more formal education (Stephens, 2013).

The National Union of Students (NUS), in their 2010 HEFCE report, highlighted the risk that institutions which do not respond to this fast-moving world will become marginalised, and some have even suggested that universities are acting ‘irresponsibly’ (Bean, 2014). At the chalk face, university lecturers find themselves riding a perfect storm. Lecturers want to employ a learner-centric approach that acknowledges students' desire to be engaged in new ways and to keep in touch with global concerns, but course redesign appears deliberately tortuous and risk averse. Time spent on redesign also eats into the time lecturers have available for their own research, threatening their academic progression. Juggling these opposing priorities is a challenge for seasoned and new lecturers alike, and requires teaching professionals who are student-focused, innovative in their outlook, and able both to change and to challenge the prevailing status quo within university management. There is also the challenge of measuring whether students are able to convert these improvements into better marks (Chowdhry, Sieler, & Alwis, 2014).

We present our own developmental journey, in which we learned to use commonly available tools in our teaching to address some of the practical issues described above. These are small incremental steps towards a more learner-centric approach that allows us to be both experimental and confident. We demonstrate a positive impact on student satisfaction, engagement and enjoyment, closely aligned with the renewed emphasis of National Student Survey (NSS) scoring on student engagement and collaboration, and we suggest that the approach can be repeated by other lecturers teaching large cohorts. Bearing in mind the constraints that lecturers face, we have prioritised innovations with time-saving potential.

The two units under study are Macroeconomic Principles (ECON10042) and Macroeconomics IIA (ECON20401), typical large-cohort undergraduate units delivered within the School of Social Science, University of Manchester. Cohort sizes have averaged 400 to 600 students over the last three years. Both are core compulsory modules for the Bachelor of Arts in Economics programme, and they are also taken as modules on a range of other programmes. Teaching has followed a ‘traditional’ approach of instructor-led lectures and teaching assistant-led seminars, with assessment in the form of an exam. Abilities across the cohorts vary widely, reflecting the different streams of students taking the units; for some the course is part of their chosen vocation, whereas for others it is a means to an end. Historically, the Economics discipline has suffered from problems of student engagement and satisfaction. End-of-unit evaluations revealed that students were dissatisfied with opportunities for feedback, some reporting a feeling that they were ‘just a number’.

In 2009, one of the authors, Paul Middleditch, was appointed as a teaching-focused lecturer to take over the Macroeconomic Principles and Macroeconomics IIA modules. Whilst the syllabus was based around a key text, and the overall structure of the course was initially maintained, there was a desire to use a new teaching tool, a classroom response system, as one way to overcome the problems associated with large-cohort teaching. With support from a faculty learning technologist, Will Moindrot (the other author), we began to use TurningPoint ‘clickers’ in lectures, allowing students to interact with lecture presentations by answering multiple choice questions embedded within PowerPoint slides. This presented the opportunity to explore how new teaching methods could benefit the student experience. We initially employed the clickers within revision lectures, presenting exam-style questions for students to test themselves on, but through partnership we have been able to build a repertoire of innovative approaches for using this tool. One unique aspect of this project, then, is the innovation that emerged, unexpectedly, from collaboration between an academic and a technologist.

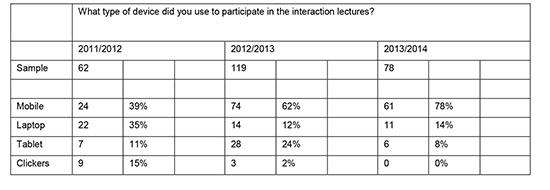

By 2010, the logistics of transporting large quantities of clickers on a weekly basis had become burdensome, and so we explored alternative response systems that would allow students to use their own devices instead. We settled on TurningPoint ResponseWare for its interoperability with the University’s existing clicker technology, which made it an easy-to-use, inclusive solution. Since then we have seen a year-on-year increase in the use of students’ own web-enabled devices. Table 1 below reports the types of devices students used to interact during lectures. It is interesting to see the sharp increase in mobile phone use and the fall in the number of clickers required, reflecting a fast-changing technological landscape that we have termed the technological tidal wave. In this paper, we refer to this technology more broadly as classroom response systems (CRS) to denote our shift away from ‘clicker’ technology specifically.

Table 1 Survey results measuring the types of devices that students used to interact during lectures, 2010–2013

The use of students’ own mobile devices meant that we were able to use the technology more frequently and, as a result, we have had greater opportunity to practise and refine our pedagogical methodology. Close collaboration between a convenor and a learning technologist has also enabled a ‘hot-housing’ of approaches, in which each can follow developments within education, offer mutual support, and collaborate on iterative experimentation, leading to a succession of innovations. Spending time developing teaching strategies in class has made us more attuned to evaluating our overall teaching approach and to considering individual elements within it holistically. It has also revealed wider benefits, such as social value for students and greater agility in teaching practice, that were not immediately apparent when we first took up this tool. Through data collection we have found further gains in providing students with a positive learning experience through continued use of the voting system. The purpose of this paper is to highlight how the approaches we have used correlate with increased levels of student satisfaction, engagement and enjoyment.

There are many reasons given in the literature as to why lectures are not ideal venues for learning: class size and environments that inhibit participation and involvement (Draper & Brown, 2004); ingrained passivity leading to mindless note-taking and postponement of understanding (Kolikant, Drane, & Calkins, 2010; Draper & Brown, 2004; Crouch & Mazur, 2001); absence of feedback or personalisation of learning to the student (Kolikant et al., 2010); and a minimal or biased feedback loop to the lecturer (Draper & Brown, 2004; Boyle & Nicol, 2003). The lecture is the oldest continuous form of instruction within universities (Laurillard, 2013) and, despite its deficiencies, its favoured status has been strengthened in the £9k era as a way of providing contact teaching “to the largest number at the lowest cost” (see Shulman, 2005, for a discussion).

Bligh (1998) suggests that lectures are of average effectiveness in transmission of information (in comparison with written texts and audiovisual media), and that teaching which promotes active thought through ‘buzz groups’ or seminars is more fit for purpose. Following the constructivist view, there is now widespread recognition that deep and lasting learning is most likely to occur in environments that place students within an active role (Draper & Brown, 2004). Laurillard’s (2013) conversational model describes how dialogue between student and teacher takes place in education, and most lecturers make some attempt to vary lecture delivery with informal questioning in order to put students in an active role.

Cutts, Kennedy, Mitchell and Draper (2004) argue that Laurillard’s conversational model is based on one-to-one interaction, and Perlman-Dee (2014) finds that room and cohort size, and even timetabling, restrict the degree to which traditionally structured teaching can move towards a student-led approach. Lecture rooms inhibit visible student participation (Shulman, 2005) and discourage lecturers from trying out new things. It takes a confident lecturer to move away from the security of ‘chalk-and-talk’ and a syllabus-centric approach towards creating opportunities for student engagement, particularly now that lecturers are so wary of being evaluated negatively. So what can be done to facilitate the introduction of increased dialogue and activities in lectures?

CRS (also known as clickers, voting pads, personal response systems [PRS] etc.) are a tool commonly promoted to lecturers looking to increase interactivity within face-to-face teaching (Simpson & Oliver, 2007). Koenig (2010) suggests that there are affordances inherent in CRS that make their use of interest within teaching. Here is a collection of those benefits from the literature:

O’Donoghue, Jardine and Rubner (2010) suggest the existence of a hierarchy of CRS use: a spectrum in which some instructors use CRS for surface-level quizzes (which they argue can eventually lead to loss of interest), whilst at the other end CRS are used to facilitate dialogue-rich discussion activities such as peer instruction (Mazur, 1997). Peer instruction makes use of structured questioning, which Crouch and Mazur (2001) regard as having the potential to engage every student through discussion, rather than only “a few motivated students” as with informal questioning in a lecture. Crouch and Mazur (2001) set out the process thus:

1. Short presentation delivered by the tutor around a subject or concept.

2. A question is posed, which students answer individually before reporting their answers to the tutor.

3. Students invited to discuss in small groups their answers and reasoning to try to reach a consensus.

4. Students return to the original question and report findings to the instructor.

Crouch and Mazur (2001) found that multiple choice questions are the most manageable form of student response for large cohorts, and Bruff (2009) finds that CRS are particularly suited to supporting and augmenting discussion activities because they allow students to respond confidentially. Draper and Brown (2004) identify the fast and accurate collation of responses into an on-screen graph as another affordance of CRS that benefits this type of activity.

As with any tool, practice refines technique: Crouch and Mazur (2001) document a 10-year period of continued gains in student grades, which they attribute to their better grasp of the pedagogy surrounding discussion tasks. Draper and Brown (2004) suggest that even modest CRS use, for example spending time developing good question sets, offers opportunities to reap increased benefits for teaching and learning.

More broadly, the literature describes a transformative process underlying the technical refinement, in which lecture environments move from silence and passivity towards dialogue and interaction (Crouch & Mazur, 2001; Boyle & Nicol, 2003; Cutts et al., 2004; Draper & Brown, 2004; Gauci, Dantas, Williams, & Kemm, 2009; Koenig, 2010; Kolikant et al., 2010; Broussard, 2012). Kolikant et al. (2010) suggest that as instructors employ CRS, and reopen channels of communication, it becomes in their interest to find further ways to encourage students to step out from their anonymity. The evidence suggests that clicker use provides a very gentle lead-in towards adoption of a more learner-centric approach.

Our motivation to adopt the social media platform Twitter came from our unexpected enjoyment of the anonymous communication tool built into the classroom response system. There is also an emerging literature on the use of Twitter in higher education practice, most notably Junco, Heiberger and Loken (2011), who provide evidence from a controlled study that Twitter can be used specifically as a pedagogical tool: the experimental group, which used Twitter, became more engaged and attained higher grade point averages than the control group. Following this, Junco, Elavsky and Heiberger (2013) suggest that the designed adoption of these virtual engagement tools can facilitate student collaboration and better educational outcomes. By contrast, Kassens-Noor (2012) compares Twitter as a teaching practice with more traditional learning methods, and the findings add a note of caution: whilst Twitter can be a powerful teaching medium outside class, it can also hinder learning where the material requires students to be self-reflective. Graham (2014) also suggests that, even though the use of Twitter increases participation and engagement outside the classroom, an incentive may need to be designed in to realise the technology’s full potential.

Generally, the literature suggests a number of ways in which technology can have a positive impact on the student experience. In this paper, we explore how these tools and the attendant strategies for their use can benefit instructors facing the challenge of adopting new teaching methods whilst maintaining levels of student satisfaction and positive learning experiences. Our expertise comes from the way in which we have used such systems. The investigation therefore takes the form of a case study, through which we explore how the specific applications we developed are able to provide benefits. As autonomous innovators, we have evaluated our modes of use by gauging student opinion on the themes of student satisfaction, student enjoyment and student engagement, metrics which we believe support students’ overall satisfaction with their learning experience. Our evaluations serve either to support or to reject these methods as ‘vehicles’ towards achieving gains in these areas.

We began by using online surveys with students; however, low return rates, and a discouragement by management fearful of ‘survey overload’, led us instead to use the CRS to collect quantitative data. Whilst the use of CRS for teaching is well documented, there is little in the literature on the use of this tool for conducting surveys. As Bruff (2009) highlights, CRS can be useful for simulating the collection of data for live demonstration of statistical principles to students, but within the context of research methodology we found only one example. Bunce, Flens and Neiles (2010) used CRS as a ‘non-conspicuous’ recording tool for participants in their study to map where attention lapses occur in lectures, and reported that clickers were a convenient data collection method. Our feeling is that, whilst many instructors use such systems to collect student feedback informally, no large-scale research has been conducted into their use as a survey mechanism. Yet in our research we have found this to be a quick and efficient data collection technique, with high return rates, a sample drawn directly from the population of interest, and the facility to standardise measurement year-on-year.

In the absence of wider evidence, we cannot rule out that collecting data through the technological tool itself may bias results against a section of the cohort in our particular research area. However, we have provided students with alternative methods of response (see Table 1), and self-selection is a design limitation of all forms of self-completion survey. Our move from online surveys to a response system for data collection, illustrated in Table 2 below, secured a much higher return rate, thereby reducing non-response error. Sample size is indicated for all questions.

Our evidence comes from our evaluations using this method with two cohorts, from Macroeconomic Principles and Macroeconomics IIA. We can also present data over a number of years of consecutive use, providing a longitudinal study with cohort analysis that has the potential to overcome problems with attrition, external variables, etc. (Cohen, Manion, Morrison & Dawson, 2007). As this reflects our own development of practice, we present findings for each approach sequentially: classroom interaction was introduced in 2010, peer discussion techniques in 2011 and Twitter as a communication tool in 2013. Although this prevents a like-for-like comparison, we feel that the year-on-year longitudinal analysis clearly shows the developmental nature and enhancement of practice that has taken place. We used closed-ended questions aligned with the metrics defined above for achieving a positive student experience. Questions took the form of a statement with a five-point scale from ‘strongly agree’ to ‘strongly disagree’, although the scale labels were later changed slightly to match those of the NSS, running from ‘agree’ to ‘disagree’. Questions are listed in the results and discussion section.

We present the story of our own pedagogical development as teachers refining our skills through practice. No students were disadvantaged or put at risk of harm, stigma or prosecution through our investigation. Data were either collected anonymously (through surveys and polls, for example) or anonymised at the point of collection, and no control groups were used. The paper is a secondary evaluation of refinements to, and reflection on, our practice carried out within the normal licence of practising lecturers; accordingly, we do not consider that any of the work presented here raises ethical concerns.

Below we present the results taken from our surveys for classroom voting aimed at increasing satisfaction, peer instruction aimed at increasing enjoyment and communication aimed at student engagement:

We began using a CRS in 2009, but it was from the 2011/12 academic year that it became a regular feature on both units (Macroeconomic Principles ECON10042 and Macroeconomics IIA ECON20401), and from this period we started to collect data for comparison across academic years. Although we had initially introduced TurningPoint ResponseWare to enhance student satisfaction, it became clear that the system offered other pedagogical benefits for economics students. Our key driver was to put students in a position of active participation, providing motivational benefits and giving us a better picture of student comprehension that we could then act upon in our explanations. For a comprehensive summary of applications and benefits of this tool, see the literature review above.

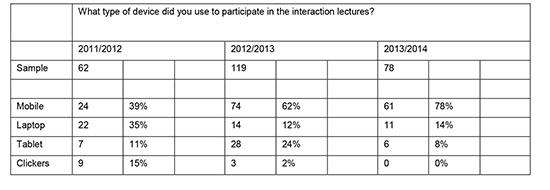

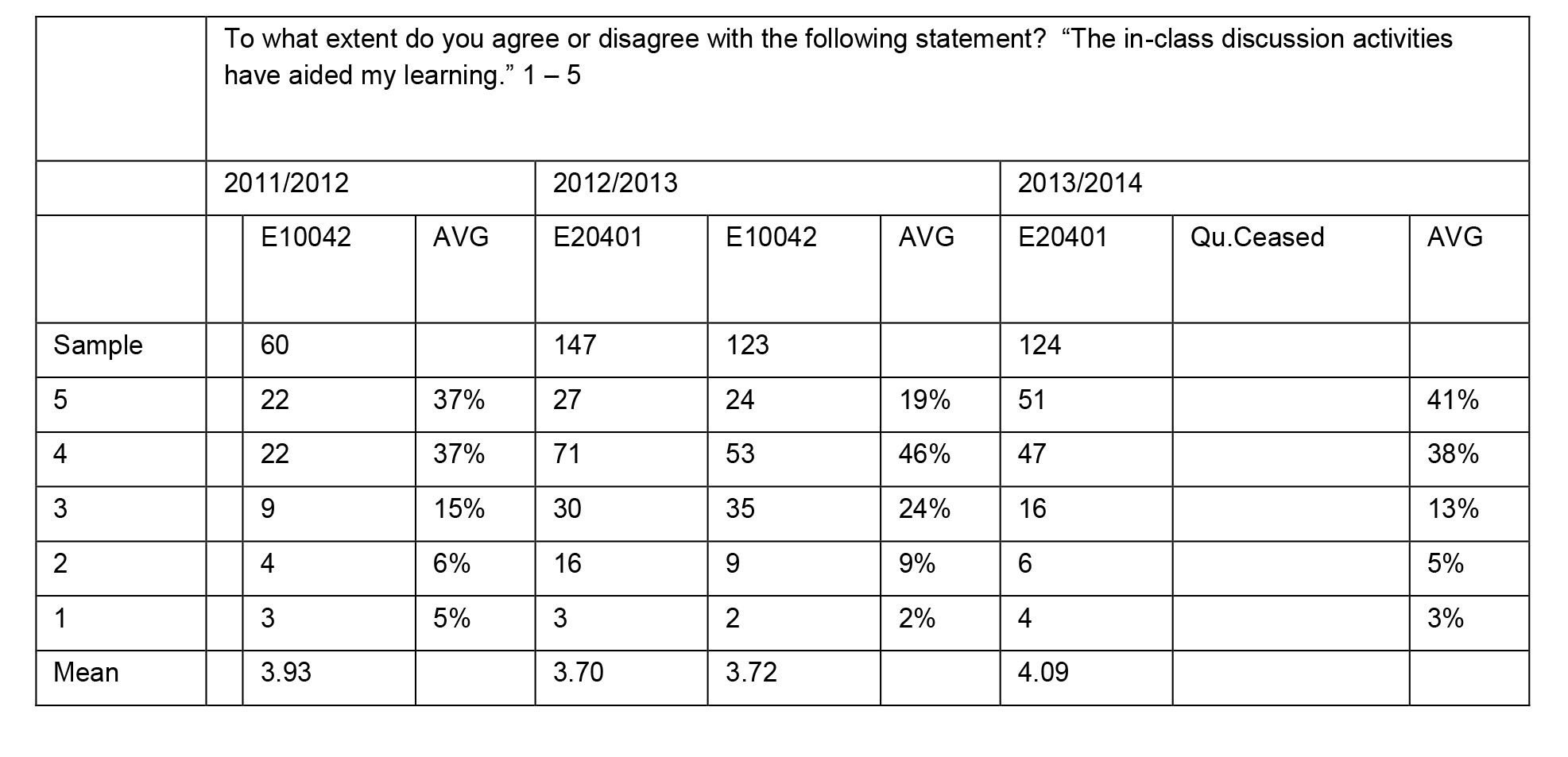

To measure the success of this tool in enhancing satisfaction, we surveyed students in the last taught session, normally the revision session, in which the system was always a feature. Students were asked to respond to the sort of question they might find in the NSS. The results obtained over the last few years are outlined below in Table 2.

Table 2 Survey results from students exposed to classroom voting technology

The most obvious observation from these results is that students felt the use of interaction technology increased their satisfaction with the programme, with annual averages of between 80% and 91% agreeing. Our highest score in percentage terms came most recently, on ECON10042 in the spring semester of 2013/14. The annual averages also show a general improvement across years, which can most likely be accounted for by students’ increasing exposure to this tool and by our own development. This result is consistent with research by Nielsen, Hansen and Stav (2013), who found that experienced use increased satisfaction with student response systems. The means reported for each year reflect the general level of satisfaction and a clear upward trend.
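The two summary statistics discussed here, the percentage agreeing and the mean score, can be computed from raw poll responses along the following lines. This is a minimal Python sketch, not the procedure used to produce Table 2: it assumes a hypothetical numeric coding (5 for ‘strongly agree’ down to 1 for ‘strongly disagree’), which the paper does not specify, and it pools ‘strongly agree’ and ‘agree’ as agreement.

```python
from collections import Counter

# Hypothetical coding of the five-point scale; the numeric codes behind
# the published means are an assumption, not taken from the paper.
SCALE = {
    "strongly agree": 5,
    "agree": 4,
    "neither agree nor disagree": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def summarise(responses):
    """Return (n, percent agreeing, mean score) for a list of Likert labels."""
    counts = Counter(responses)
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no responses to summarise")
    # 'Agreeing' pools the two positive categories, as in an NSS-style report.
    agreeing = counts["strongly agree"] + counts["agree"]
    # Unknown labels raise KeyError here rather than being silently dropped.
    mean = sum(SCALE[label] * k for label, k in counts.items()) / n
    return n, 100 * agreeing / n, mean


# Illustrative (invented) responses from ten students.
poll = (["strongly agree"] * 3 + ["agree"] * 5
        + ["neither agree nor disagree"] + ["disagree"])
print(summarise(poll))  # (10, 80.0, 4.0)
```

Keeping responses as labels and applying the numeric coding in one place makes it easy to switch scales when the labels are realigned with those of the NSS.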

In 2010, we started to use peer instruction (Mazur, 1997). We used two peer instruction questions per two-hour lecture, with questions that explored the concept under study and were aligned with the final assessment. We carefully storyboarded the activity for ourselves and the students via PowerPoint, with prompts such as “Vote now”, “Discuss (3 minutes)” or “Vote again”. We embedded a short music clip in the PowerPoint to play at low volume during the discussion element. We envisaged that this would ease students into discussion, and it also provided a non-verbal cue for students to draw their discussion to a close.

We kept the result of the initial poll hidden to avoid biasing the small-group discussion before re-polling the same question. After the second vote, the results were displayed and feedback was given to the whole class by going through the aggregated responses. Students were keen to have the correct answer confirmed, which led to further class-wide discussion. As an extra ‘fun’ activity, we also gave them the opportunity to explore any shift of opinion between the pre-discussion and post-discussion polls. This sometimes gave surprising results, with a shift towards or even away from the correct answer, providing an opportunity for the class to examine their learning at a metacognitive level and leading the lecture in interesting directions. Practising peer instruction in such a large cohort presented obvious challenges, such as the need to restore quiet once the class became overexcited, but these were clearly outweighed by the enhancement the method brought to students’ learning experience.
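Comparing the pre-discussion and post-discussion polls amounts to computing, for each answer option, the change in its share of the vote. A minimal Python sketch, assuming each poll is available as a mapping from option to vote count (a hypothetical export format, not a TurningPoint API):

```python
def percentages(poll):
    """Convert {option: votes} into {option: percentage of all votes}."""
    total = sum(poll.values())
    if total == 0:
        raise ValueError("empty poll")
    return {option: 100 * votes / total for option, votes in poll.items()}


def opinion_shift(pre, post):
    """Percentage-point change in each option's share between the two votes.

    Options that appear in only one poll are treated as having 0% in the other.
    """
    p_pre, p_post = percentages(pre), percentages(post)
    options = sorted(set(p_pre) | set(p_post))
    return {o: p_post.get(o, 0.0) - p_pre.get(o, 0.0) for o in options}


# Invented vote counts for one question before and after peer discussion.
before = {"A": 40, "B": 35, "C": 25}
after = {"A": 70, "B": 20, "C": 10}
print(opinion_shift(before, after))  # {'A': 30.0, 'B': -15.0, 'C': -15.0}
```

Working with shares rather than raw counts matters in a large lecture, because the number of students voting usually differs between the two rounds. A negative value for the correct option corresponds to the occasional shift away from the correct answer.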

Through our evaluation, students responded positively to the peer instruction (PI) approach as providing an enjoyable social aspect to their studies. Although we started out in 2011/12 by measuring student perceptions of the learning benefits of the discussion tasks, it became clear that students actually associated the value of the discussion opportunities more with enjoyment of their studies (Tables 3 and 4). For this reason, the question on pedagogical benefits was dropped after the first semester of 2013/14 in order to concentrate on the social benefits to students (see Table 4). Not illustrated within these results is the development opportunity this provided, by allowing us to gain a better understanding of question writing. Mazur (2009) describes a process of splitting opinion, getting students to commit to a defensible answer and thereby setting the scene for engaged discussion and thought. Our experience shows that questions to which a majority of students respond correctly are of limited value for activating discussion.
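The rule of thumb stated here, that questions a majority of students answer correctly on the first vote do little to activate discussion, can be applied as a simple screen when reviewing a question bank after each lecture. The sketch below is illustrative only: the 50% threshold follows the ‘majority’ wording, while the function name, question labels and vote counts are hypothetical.

```python
def splits_opinion(first_vote, correct, threshold=0.5):
    """True if the correct option won no more than `threshold` of first-round votes.

    Such questions leave enough disagreement for peer discussion to be worthwhile.
    """
    total = sum(first_vote.values())
    if total == 0:
        raise ValueError("empty poll")
    return first_vote.get(correct, 0) / total <= threshold


# Hypothetical first-round results: (votes per option, correct option).
question_bank = {
    "Q1 multiplier": ({"A": 30, "B": 50, "C": 20}, "A"),
    "Q2 IS curve": ({"A": 80, "B": 20}, "A"),
}
keep = [name for name, (votes, answer) in question_bank.items()
        if splits_opinion(votes, answer)]
print(keep)  # ['Q1 multiplier']
```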

Table 3 Survey results from students exposed to PI techniques – Pedagogical Benefits

Table 4 Survey results from students exposed to PI techniques – Social Benefits

From the results in Table 3 we can see that students held a mainly positive view of the pedagogical benefits of peer instruction. The proportion agreeing with the statement ranges from 65% in 2012/13 to 79% in 2013/14. An interesting observation from these data is that, despite more practised use of this teaching methodology, the results do not show a clear upward trend. This may have been because there was not always an apparent shift towards the correct answer following discussion, a phenomenon we attribute to our selection of questions for this activity; we would also question whether reaching a correct answer is as fruitful as a thought-provoking experience. As previously highlighted, the realisation that social benefits and personal enjoyment were more relevant to our project led us to refocus the question, and Table 4 reports this from 2012 onwards. In 2012/13 the percentage of students who found PI enjoyable was 84%, and in 2013/14 it was 77%. The social benefits and enjoyment derived from PI are clearly positive, and one would assume that making class more enjoyable and rewarding improves the student experience, as the means in Tables 3 and 4 reflect.

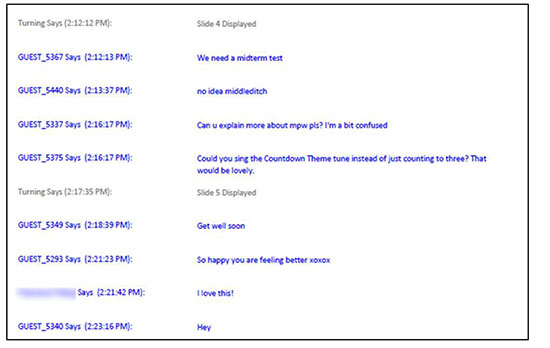

A further innovation in our practice occurred when we invited dialogue through new mobile-friendly communication tools. The background to this was our use of an anonymous commenting system built into the TurningPoint ResponseWare application. Alongside polling data, the system can collect free-text feedback from students, which the tutor can analyse after the session by generating an Excel report. Comments are handily grouped by slide (see Figure 1). A major benefit of the communication channel provided by ResponseWare is the level of anonymity it gives students, so that they are not deterred from asking questions, and on this basis we speculatively invited students to use this feature.

Students began by using it where they had difficulty understanding certain concepts from the lecture. They also found their own ways of using the system: favourable comments for us, practical requests such as asking the lecturer to speak up, and even suggestions for innovation. For example, it was their suggestion to use audio clips as sound cues to signify the end of discussion or polling (see Figure 1). By acknowledging and responding to these messages, we found a multiplier effect, encouraging students to participate further in this way. As instructors, we also found this a stimulating and engaging experience in which students were active collaborators in teaching innovation.

Figure 1 An example of student comments via TurningPoint on ECON10042

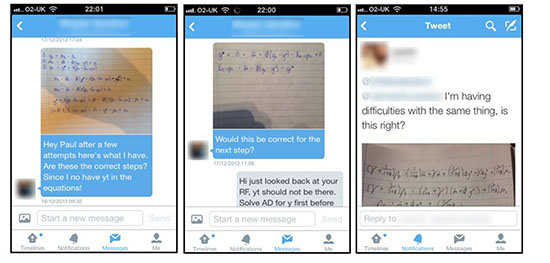

Encouraged by the increased dialogue and partnership, we made tentative steps towards using another form of communication in semester 1 of 2013/14: the social media platform Twitter. Previous studies have found that where students are encouraged to use social media as part of the course, deeper learning can be achieved; see Graham (2014), Junco et al. (2011) and Kassens-Noor (2012) for detailed discussions of Twitter as a platform to enhance the learning environment. As an icebreaker for first-year students, we held a competition to suggest and then vote (using the CRS) for a course hashtag. Students were then encouraged to use this hashtag on Twitter for all course communication, such as queries about the course and the material. They were also encouraged to collaborate and to request support from the teaching assistants. Again, students shaped how they wanted to use this tool, quickly discovering the effectiveness of attaching photos of technical material to receive feedback from the convenor. Importantly, we advised students that they did not need a Twitter account to benefit vicariously from the interactions taking place there.

Figure 2 Student interaction via Twitter (ECON10042)

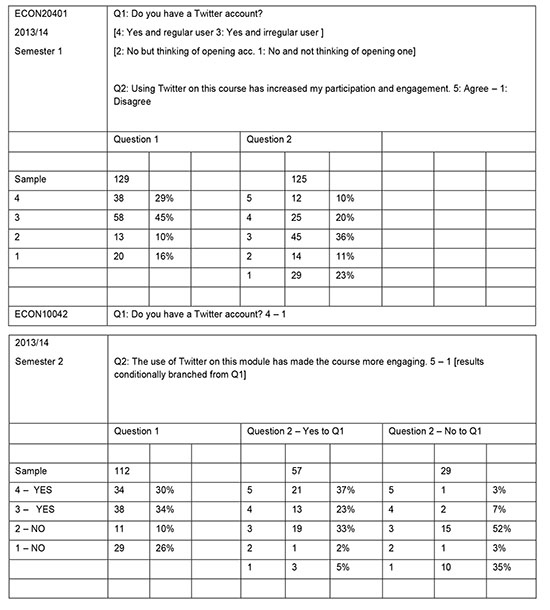

We experienced further benefits from a significant reduction in the number of lengthy emails received from students, who now saw a benefit in the immediacy and collaborative nature of Twitter. This benefit to the convenor was compounded by a reduction in emails with sizeable attachments, which are restrictive for mobile working and often better suited to opening on a desktop. Student opinion on the use of Twitter was surveyed using the CRS from semester 1 of 2013/14. First, students were asked whether or not they had a Twitter account, and whether they were planning to open one in the future. Second, students were asked about their perception of the tool as a vehicle to enhance engagement. For the second semester, we linked the questions so that responses to the second question could be sliced by how the first question was answered, which provided more insight than the aggregate responses alone. The results are presented below in Table 5.

Table 5 Survey results from students who had use of Twitter as a course communication tool

An observation from the above data is that Twitter had a higher saturation rate amongst second-year students. Further analysis of cohorts would be required to confirm this, but the data challenged our perception that each new intake of students is more technologically equipped than the preceding one (e.g. in view of the increasing take-up of mobile voting over clicker use in Table 1). One possible explanation is that some students are persuaded to adopt a new technology when they see it being used at university, so that the saturation rate increases with time spent in higher education.

In semester 1, the results indicate that 30% of all students polled agreed that Twitter increased their participation and engagement on the course, but this figure included students who did not have Twitter accounts. With this in mind, we decided to concentrate on student engagement and to measure the effectiveness of Twitter better by conditionally branching the responses in semester 2. The response to question 2 shows that 60% of students with Twitter accounts agreed that Twitter made their course more engaging, compared with 30% of all students in semester 1, and a large decrease in the number of those who disagreed. Whilst this seems to suggest that owning a Twitter account is key to increased student engagement through this tool, the significant proportion voting ‘neither agree nor disagree’ is likely to be because, at the time of surveying, students had not yet seen the tool used in the revision period, which has tended to be fruitful for the kind of collaborative learning typified in Figure 2.
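Conditionally branching the semester 2 questions amounts to cross-tabulating each student's answer to question 2 against their answer to question 1. A minimal Python sketch, assuming a hypothetical export with one anonymous record per respondent holding both answers (the field names `q1` and `q2` are illustrative, not TurningPoint's format):

```python
from collections import Counter, defaultdict


def slice_by(responses, q1="q1", q2="q2"):
    """Cross-tabulate answers to q2 by the same respondent's answer to q1."""
    table = defaultdict(Counter)
    for record in responses:
        table[record[q1]][record[q2]] += 1
    return {group: dict(counts) for group, counts in table.items()}


def percent_agree(counts, agree_labels=("strongly agree", "agree")):
    """Share of a group answering with any of the agreeing labels."""
    n = sum(counts.values())
    if n == 0:
        return 0.0
    return 100 * sum(counts.get(label, 0) for label in agree_labels) / n


# Invented records: q1 = has a Twitter account?, q2 = 'Twitter made the course more engaging'.
records = [
    {"q1": "yes", "q2": "agree"},
    {"q1": "yes", "q2": "strongly agree"},
    {"q1": "yes", "q2": "neither agree nor disagree"},
    {"q1": "yes", "q2": "disagree"},
    {"q1": "no", "q2": "neither agree nor disagree"},
]
by_account = slice_by(records)
print(percent_agree(by_account["yes"]))  # 50.0
print(percent_agree(by_account["no"]))   # 0.0
```

Reporting agreement within each group, rather than across all respondents, avoids the dilution seen in semester 1, where students without an account were counted in the denominator.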

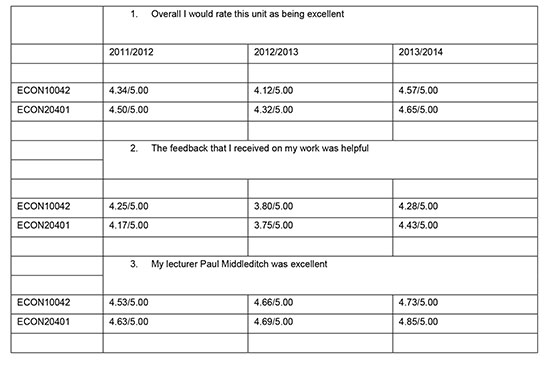

Another way to view the effects of the innovations introduced to our courses is through the cumulative evidence collected over three years from the online evaluation questionnaires, which students accessed through the Blackboard virtual learning environment. Students were asked to respond with ‘agree’, ‘mostly agree’, ‘neither agree nor disagree’, ‘mostly disagree’ or ‘disagree’. ‘Agree’ scored 5 and ‘disagree’ scored 0, so the averages are out of 5.00.

Table 6 Survey results from online evaluation questionnaires over the three years

The results shown in Table 6 are for both core undergraduate courses in Macroeconomics over the three-year period 2011 to 2014. Although scores on questions 1 and 2 (student perception and feedback) rose over the period as a whole, they dipped in 2012/2013. This dip in the middle year shows that even when improvements are made systematically on large-cohort courses, other factors may have a significant negative impact. One-off problems with timetabling, library resource provision, IT services and the quality of teaching assistants can all have a disproportionate effect on the evaluations. These fluctuations may also simply reflect random noise; we did not separately identify the cause. By contrast, scores on the question rating the lecturer’s ability to convene the course successfully appear unaffected by such factors. Here the improvements introduced over the three years are consistently reflected in the outcomes, with scores rising from 4.53 to 4.73 on Macro Principles (ECON10042) and from 4.63 to 4.85 on Macroeconomics IIA (ECON20401). Not shown here are the open comments in the online evaluation questionnaire, which were overwhelmingly positive about the innovations and technologies used during the taught courses.

This paper has discussed the approaches taken on two large-cohort economics courses to meet the challenges presented by large-group teaching, the introduction of student fees, the National Student Survey and the fast pace of technological change. Our project began in 2009 with a classroom voting system used for rudimentary concept testing, and moved on to techniques such as peer instruction and the use of social media in pedagogical practice. From 2011, we began collecting data to measure the success of the changes made to the students’ learning environment and to guide our future moves. We have seen that classroom interaction can clearly improve student satisfaction, that peer instruction can lead to greater enjoyment of face-to-face teaching, and that social media can help break down barriers to greater student engagement. A further, unexpected outcome of this project was how much could be achieved through collaboration between an academic mindful of students’ pedagogical needs and a learning technologist mindful of the fast-moving world and the availability of new technology.

Opening and extending dialogue with students has produced the biggest leaps in our own development towards a largely student-led and student-focused teaching approach. We have found that communication tools work best when students find them easy to use and in tune with their lifestyles. We have also found that giving students the creative licence to develop their own strategies for using these tools provides a rich seam of inspiration for teaching innovation and enhancement, and captures student engagement inside and outside the classroom. This is consistent with the growing popularity of student-controlled tools highlighted by Walker et al. (2013).

Further challenges face the sector as technology continues to advance rapidly and ‘online’ education grows in popularity and in new forms. It is therefore more important than ever that face-to-face teaching is relevant to students and adds value over an online experience. We suggest that following a development pathway such as ours can help traditional lecturers progress towards teaching that is more dialogical and in tune with learners, while remaining compatible with the lecture format. When changing the traditional structure of lectures and tutorials, we recommend keeping students’ enjoyment of the course as the ultimate priority. We have used interaction, peer instruction and communication tools to achieve this, and increased our own enjoyment in the process.

Paul Middleditch is a Lecturer in Macroeconomics at the University of Manchester, an Associate Member of the Economics Network and a Fellow of the Higher Education Academy (FHEA). His research interests include monetary policy, macroeconomic time series and pedagogical innovation, particularly the use of technology in lectures to foster a more interactive learning environment.

http://staffprofiles.humanities.manchester.ac.uk/Profile.aspx?Id=paul.middleditch

Will Moindrot is an Educational Technologist at the Liverpool School of Tropical Medicine. He has worked in technology-enhanced learning for ten years, previously at City University London and the University of Manchester. His research interests include classroom interaction tools, media technologies, and their role as agents of change supporting professional development in higher education.

http://uk.linkedin.com/in/willmoindrot