Aaron Mac Raighne, Dublin Institute of Technology; Morag M. Casey, University of Glasgow; Robert Howard, Dublin Institute of Technology; Barry Ryan, Dublin Institute of Technology

It has been described clearly in the literature that many science students complete undergraduate physics courses without a strong understanding of the concepts that are being taught (Hake, 1998; McDermott, 1991). Students may develop the ability to solve complex problems and pass exams successfully while lacking a strong conceptual understanding of the topics in hand.

In response to this issue, a number of reforms are sweeping across science classes in universities. Problem-Based Learning (PBL) can replace traditional lectures with problem-solving and peer-instruction exercises in which students are presented with a problem and work in peer groups to research and discuss the concepts leading to a solution (Edgar, 2013). Greater conceptual understanding of the required principles is typical with this method of peer instruction when compared to teaching with traditional lecture formats (Sahin, 2010).

It is also possible to implement peer instruction within large classes using student response systems or ‘clickers’. In this method, students are presented with a question and discuss with their peers differences in their answers such that a resolution may be reached, the intention being that everyone reaches the correct answer via peer instruction rather than being told it by the lecturer. Proponents of this method report an increase in students’ conceptual understanding, problem solving skills and engagement levels (Crouch & Mazur, 2001; Deslauriers, Schelew, & Wieman, 2011). In addition, online tools have been developed to accommodate learning outside the classroom. An increase in student learning and engagement has been reported in multimedia enhanced modules wherein pre-class material is presented and assessed online in the ‘flipped classroom’ approach (Chen, Stelzer, & Gladding, 2010; Sadaghiani, 2012; Seery & Donnelly, 2012).

The majority of the educational reforms mentioned include methods which increase the level of student participation and allow for peer instruction. These techniques, although proven invaluable to the students’ learning experience, require a large time investment from instructors with cycles of analysis, design, development and evaluation. Often this work needs to be done along with the modifications of course documentation. On short timeframes, with increasing teaching loads and a limit to resources, implementation of these reforms can often be a daunting task for lecturers. Furthermore, many of the tools required, e.g. student response systems or software for development of multi-media components, are expensive and often not easily available.

PeerWise is an online tool which can be used to provide a low-resource, easily implemented active-learning and peer-instruction platform within teaching modules (Denny, Luxton-Reilly, & Hamer, 2008). Students can submit multiple-choice questions (MCQs), model answers and explanations to their module-specific website. In addition, they can answer, rate and discuss questions set by other students. Within large class groups, particularly junior undergraduate university classes, this approach works well, with students creating their own repository of module-specific questions. PeerWise has been evaluated in many educational settings, particularly in tertiary education. The literature contains many examples of the implementation of PeerWise, with strong correlations shown between activity within PeerWise and an increase in students’ exam marks (Bates et al., 2012; Casey et al., 2014; Hardy et al., 2014). In this study, the students’ attitudes towards PeerWise across a large and varied student cohort are probed. An analysis of students’ use of and attitudes to PeerWise is compared to the literature to investigate whether the students recognise the benefits of PeerWise as a useful additional active-learning and peer-instruction tool.

In our study, we wished to investigate whether students' responses to, and perceptions of, the peer-instruction environment were aligned with those reported in the literature. To that end, we exposed a large and varied cohort of undergraduate students to PeerWise, administering anonymous questionnaires at the end of the exercise in order to record students' responses. Likert-style questions and thematic analysis of free-text responses revealed that, across these varied cohorts, the vast majority of students were able to recognise and articulate the same benefits of a peer-instruction environment as found in the literature.

The perception by some students that others in the class flooded the MCQ repositories with easy or copied questions was identified as a common theme across all cohorts. Conclusions drawn from this thematic analysis of student responses to their peer-instruction experiences allowed us to develop a set of ‘best practice’ recommendations for future implementation of the software.

PeerWise was integrated into modules across a wide and varied student cohort. It was implemented in a similar fashion in a number of different classes in the School of Physics (SoP) and the School of Food Science and Environmental Health (SoFSEH) in the Dublin Institute of Technology (DIT) along with the School of Physics and Astronomy (SoPA) in the University of Glasgow (UoG). Within these modules, there was a spread of years, science subjects and academic levels as shown in Table 1. Levels 6, 7 and 8 are defined by the Irish National Framework of Qualifications (National Framework of Qualifications – Homepage) as advanced/higher certificate (level 6), ordinary bachelor degree (level 7) and honours bachelor degree or higher diploma (level 8).

Table 1 Listing of the different class groups involved in the study

| Group # | Institute/School | Year/Level | Module description | Active students |
|---|---|---|---|---|
| 1 | DIT/SoP | 1/8 | Introductory physics for non-physics degree courses | 104 |
| 2 | DIT/SoP | 1/8 | Introductory physics for physics degree courses | 47 |
| 3 | DIT/SoFSEH | 1/8 & 6 | Foundation organic chemistry | 141 |
| 4 | DIT/SoP | 1/7 | Fundamental physics | 78 |
| 5 | UoG/SoPA | 2/8 | 2nd year general physics | 139 |

The delivery of each module varied depending on subject and institute; however, each module was delivered as a mix of theoretical lectures, practical based labs and a concurrent period of self-study to supplement class and lab time learning. In total, PeerWise was introduced into five modules (N=509). All lecturers involved in the delivery of the theoretical components of the modules implemented the PeerWise integration in a similar fashion. Initially, identical introductory and scaffolding materials were provided to the students and a lecturer-facilitated workshop allowed students to become familiar with the concept of peer-generated MCQs following an approach similar to that laid out in a previous study (Casey et al., 2014). Workshop exercises focused on the pedagogy and rationale of PeerWise use rather than the mechanics of the PeerWise software. Exercises highlighted methods of writing good MCQs in addition to incorporating distractors and common student mistakes into the possible answers. Examples of previous good PeerWise questions illustrated the potential to be creative, have fun and use authoring of questions as a learning exercise. Examples of the scaffolding material can be found online (Casey et al., 2014; Denny, n.d.). Anonymity within PeerWise was highlighted, and the fact that PeerWise was the students’ learning space was emphasised in order to encourage students to be creative and to allow themselves, and others, to be comfortable in making mistakes within the PeerWise environment.

To encourage student engagement with the new teaching approach, a small percentage of the overall module grade was allocated for PeerWise engagement. Across the different modules, the marks associated with PeerWise were in the region of 2–6% of the overall module grade. This percentage was not based on students answering questions correctly, but on engagement with the task and their peers online. Students gain a PeerWise Score (PWS) by engaging with PeerWise. The more the students interacted and engaged with PeerWise, the higher their PWS. This was incorporated into the marking scheme as shown in Table 2. This assessment scheme was decided upon to allow students to pass based on the minimum engagement (author, answer and comment on four questions); however, it encouraged students to engage beyond the minimum and nurtured competition within the class for the engaged students.

Table 2 Scoring system in use for this implementation of PeerWise

| Description | Score (%) |
|---|---|
| Write, comment on and answer fewer than 2 questions | 0 |
| Write, comment on and answer more than 2 questions but fewer than 4 | 20 |
| Write, comment on and answer 4 questions and get a PWS less than the class average | 40 |
| Write, comment on and answer 4 questions and get a PWS greater than the class average | 70 |
| Write, comment on and answer 4 questions and get a PWS in the top ten students | 100 |
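The banding in Table 2 can be read as a simple decision rule. The following is a minimal sketch of one such reading, not the marking procedure actually used by the lecturers. Several points are assumptions made here for illustration: the `Activity` field names are hypothetical; "write, comment on and answer N questions" is taken to mean that all three counts reach N; a student with exactly 2 complete sets is placed in the 20% band; a PWS exactly equal to the class average is placed in the 40% band; and membership of the top ten is approximated by a cutoff equal to the tenth-highest PWS in the class.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """Per-student PeerWise activity (hypothetical field names)."""
    authored: int
    answered: int
    commented: int
    pws: float  # PeerWise Score as reported by the platform


def completed_sets(a: Activity) -> int:
    """Assumed reading: a 'set' needs one question written, one answered, one comment."""
    return min(a.authored, a.answered, a.commented)


def peerwise_mark(a: Activity, class_average: float, top_ten_cutoff: float) -> int:
    """Percentage of the PeerWise allocation awarded under one reading of Table 2."""
    n = completed_sets(a)
    if n < 2:
        return 0
    if n < 4:
        return 20  # assumption: exactly 2 sets falls in this band
    if a.pws >= top_ten_cutoff:
        return 100  # assumption: cutoff is the tenth-highest PWS in the class
    if a.pws > class_average:
        return 70
    return 40  # assumption: a PWS equal to the average stays in the 40% band


# Illustrative call with invented numbers (not study data).
print(peerwise_mark(Activity(authored=4, answered=20, commented=5, pws=500.0),
                    class_average=300.0, top_ten_cutoff=900.0))
```

Because the bands above the minimum depend only on the PWS relative to the rest of the class, a student who meets the minimum cannot drop below 40% of the PeerWise allocation, which matches the stated intention that minimum engagement is enough to pass.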

Data were collected using the PeerWise activity logs for each module and a questionnaire. The questionnaire was designed to provide insight into the students’ use of PeerWise and to probe their attitudes towards the software. It contained eight Likert-scale type questions (N=356 responses) and four free-text questions (N=311 responses).

Thematic analysis on the free-text data was facilitated by entering the data into QSR NVivo 10. They were then subject to a close reading. This was followed by open thematic coding. As the responses were very short and concise, typically one to three lines, themes were very easily identified. Further examination led to categorising of the major themes and sub-themes. In this report, we focus on the major themes and sub-themes as reported by the largest number of references. Co-authors independently agreed with themes as documented. Quotes are displayed when they either illustrate the theme concisely and/or are typical of the student response categorised in that theme.

This study was carried out at two higher-level institutions, focusing on two subjects. Additional studies could be carried out to investigate the applicability of this approach in other educational settings, levels and subjects. The researchers were also the lecturers involved in delivering the theoretical elements of these modules. Pedagogical evaluation data were collected anonymously; however, student and participating researcher bias cannot be totally discounted.

As reported by many previous studies (Bates et al., 2012; Casey et al., 2014; Hardy et al., 2014), the students overall engaged highly with PeerWise and contributed far more than was expected. For 509 students, the minimum requirement would be 2,036 (509 × 4) questions authored, 2,036 questions answered and 2,036 comments made. The combined total contributions from all students were 174% of the minimum required questions, 2,400% of the minimum answer requirements and 601% of the minimum comment requirements. However, this simple analysis does not account for the different student behaviours.
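The arithmetic behind these figures can be checked with a short sketch. The group sizes are taken from Table 1 and the percentages from the paragraph above; the absolute totals it prints are back-calculated from rounded percentages and are therefore approximations, not counts reported in the study.

```python
# Active students per class group, from Table 1.
group_sizes = {1: 104, 2: 47, 3: 141, 4: 78, 5: 139}

# Minimum engagement per student: four questions, four answers, four comments.
MIN_PER_STUDENT = 4

total_students = sum(group_sizes.values())
minimum = total_students * MIN_PER_STUDENT

# Reported contributions as a percentage of the minimum requirement.
reported_pct = {"questions": 174, "answers": 2400, "comments": 601}

# Back-calculated totals; approximate because the percentages are rounded.
approx_totals = {kind: round(minimum * pct / 100)
                 for kind, pct in reported_pct.items()}

print(total_students, minimum)
print(approx_totals)
```

Run as written, this gives 509 students and a minimum of 2,036 per activity, implying roughly 3,500 questions, 49,000 answers and 12,000 comments in total.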

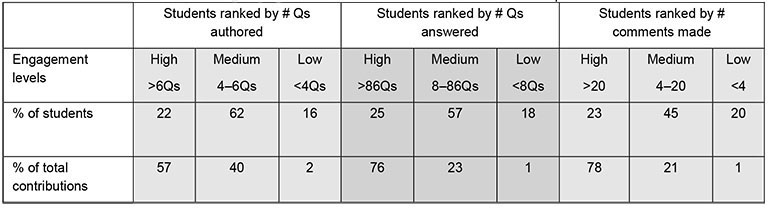

Students across the class groups tended to fall broadly into three categories: the highly engaged (22–25% of students), the engaged (45–60% of students) and the low engagement group (16–20% of students). The broad categories are defined by the number of contributions made as shown in Table 3. To explain the differences in engagement, we can look at the number of answers submitted (centre section, Table 3). The highly engaged students, who accounted for only 25% of students, submitted 76% of the total answers. The engaged group, 57% of the students, contributed only 23% of the total answers, while the low engagement group, those that answered fewer than eight questions, submitted only 1% of the answers. Very similar trends are seen if the students are ranked by either questions answered or authored or comments made as shown in Table 3. This illustrates that although there is high engagement within the class, the total reported numbers of contributions tend to disguise the fact that a small number of the students are doing the majority of the work. Analysis of the motivation for students contributing more than the minimum requirements is found in the free-text response section.
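A breakdown of this kind can be reproduced from per-student activity counts. The sketch below is an illustration under stated assumptions rather than the authors' analysis: the lower threshold of eight answers comes from the text, while the upper threshold (`high_from=100`) is a placeholder chosen here, since the boundaries in Table 3 are not reproduced in this text. The example counts are invented and are not data from the study.

```python
def engagement_shares(answers_per_student, low_below=8, high_from=100):
    """Return {group: (share_of_students, share_of_answers)}.

    low_below=8 follows the text ("fewer than eight questions");
    high_from=100 is a placeholder assumption, not a value from the study.
    """
    if not answers_per_student:
        raise ValueError("need at least one student")

    groups = {"low": [], "engaged": [], "high": []}
    for n in answers_per_student:
        if n < low_below:
            groups["low"].append(n)
        elif n < high_from:
            groups["engaged"].append(n)
        else:
            groups["high"].append(n)

    n_students = len(answers_per_student)
    n_answers = sum(answers_per_student)
    return {
        name: (len(counts) / n_students,
               sum(counts) / n_answers if n_answers else 0.0)
        for name, counts in groups.items()
    }


# Invented counts for illustration only; these are not data from the study.
example = [2, 5, 10, 20, 30, 40, 150, 300]
shares = engagement_shares(example)
for name, (students, answers) in shares.items():
    print(f"{name}: {students:.0%} of students, {answers:.0%} of answers")
```

Comparing the two shares for each group makes the imbalance visible directly: a group whose share of answers far exceeds its share of students is doing a disproportionate amount of the work.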

Table 3 Different engagement levels of the three main aspects of student engagement with PeerWise and the percentage contribution of each grouping to the total number of contributions for that aspect.

The data make clear that students contributed most in terms of answers. This may not be surprising, as authoring questions and providing feedback requires a higher level of cognitive effort than answering questions (Denny et al., 2008). However, answering many MCQs is very useful to students and acts as a form of retrieval-based learning. Repeated testing, as is the case with answering MCQs, has been shown to produce greater retention and more meaningful learning than repeated reading/studying (Karpicke, 2012).

The average student responses and the Likert-type questions asked are shown in Table 4. The responses of the students to the first three questions illustrate that the students seem to find writing and answering questions more useful than engaging in discussion. The distribution of the answers on the Likert scale across all of the modules was very similar for these first three questions. However, differences between class groups appear within the fourth question. Here we attempted to probe the amount of plagiarism that we suspected was occurring and found that the students were indeed plagiarising. In the classes with lower level students, class groups 3 and 4 from Table 1, more students tend towards ‘copying and pasting’ questions, while classes with higher level students only, level 8, appear more inclined to develop their own questions. Group 5, the only second-year level 8 students, are the only group not to admit to any ‘copying and pasting’ of questions. The issue of plagiarism is discussed again in the free-text section.

Students agreed that they did (or would) use PeerWise for revision. In modules where exams occurred during the PeerWise assessment period, peaks in activity can be seen which coincide with exam dates. This activity correlates well with the students’ answers. However, after the PeerWise assessment date but before the end-of-module exams very little, if any, activity was registered on PeerWise across all modules. The usage data not only contradicted previous reports (Denny et al., 2008) but also seemed to contradict the students’ answer that PeerWise is a useful revision tool. However, further light is shone on this in the free-text answers discussed in the next section.

On the whole, students did not seem to access PeerWise primarily on their mobile device, but a large number still did – approximately 80 students. Some students mentioned in the free-text responses that the site is not mobile friendly and they would recommend a PeerWise site designed for the mobile platform to accompany the main site.

When asked if they would like to see PeerWise introduced in other modules, the spread of answers varied between class groups. The classes with lower level students, groups 3 and 4 (Table 1), agreed/strongly agreed, while the higher academic level students, the second-year level 8 students in group 5, disagreed/strongly disagreed. The first-year level 8 student groups, which sit between the two opposing groups in academic level, gave a neutral response.

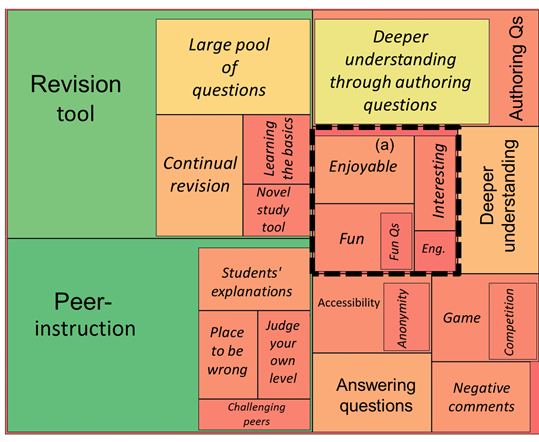

The themes arising from analysis of this question are shown in Figure 1. The area covered relates to the number of references coded, with sub-themes illustrated in italics. Here we will discuss only the major themes or benefits as viewed by the students: ‘Revision Tool’, ‘Peer Instruction’ and ‘Authoring Questions’.

Figure 1 Categories of the benefits/themes of PeerWise as reported by the students. The area of each section represents the number of coded references of that theme. Sub-themes are shown in italics, (a) represents the ‘General Positive comments’ theme, and the outline is shown by the dashed box. Eng. represents the sub-theme ‘engaging’.

References to PeerWise as a revision tool occur in approximately a third of all responses. Initially, this would appear to contradict the PeerWise usage data discussed earlier in this report. Those data indicated that students did not use PeerWise in the period after the PeerWise assessment date and before the end-of-module exam. However, it is clear from the student responses that many of the students were using PeerWise as a form of continual revision throughout the module. Responses such as those quoted below indicate that PeerWise was being used by the students to reflect on material delivered in class in a timely fashion and not used for ‘cramming’ in the time before the module exams: “Acts as a homework type task to reinforce work learned in class”; “..it keeps you on top of the subject, useful for studying”; “Forcing me to sit down and study and pull out my physics notes”; “It was a good way to stay continuously engaged with the subject..”; “It gets you to think about the topics covered in class and look over them”.

The tool is being used for continual revision throughout the module delivery. Research is ongoing as to the optimal conditions for maximum retention (Cepeda, Vul, Rohrer, Wixted, & Pashler, 2008), but broad agreement over the field of research is given to the fact that spaced (or continual) revision can dramatically enhance information retention (Cepeda, Pashler, Vul, Wixted, & Rohrer, 2006).

Other sub-themes were evident within the Revision Tool theme. Largest amongst these was the benefit of a large pool of questions created by the students themselves. A large pool of questions can help with retrieval-based learning, which can improve information retention and meaningful learning (Karpicke, 2012). Creating a large pool of questions would be extremely time-consuming for instructors, and it was one of the design goals of PeerWise to have the students create the repository for themselves (Denny et al., 2008). Additionally, students noted the benefit of a novel and additional tool to aid their study: “It helps bring a different aspect to the revision process, as well as providing tons more practice questions than lecture notes”.

The second-most-noted major theme, as shown in Figure 1, is the benefit of peer instruction. Students mentioned the large number of people with whom they can discuss their understanding of questions. Many also referred to the language used, noting that ‘simple language’, or language different to that of the lecturers, is used. The benefit of ‘accessible’ language, the language of the student over the instructor, has been reported to be one of the advantages of peer instruction (Crouch & Mazur, 2001; Nicol & Boyle, 2003). Furthermore, students mentioned the benefit of having the same topic discussed a number of different times and that all the different explanations combined to give them a greater understanding. Examples of these comments are: “can engage with others if you don’t understand something. You have many people that will help you”; “getting explanations to some questions from other students as they were explaining it in easy language”.

Another evident sub-theme within the peer instruction theme arose where students perceive that PeerWise allowed them a place where they could comfortably make mistakes and learn from these mistakes. “Because it was anonymous you didn't feel embarrassed answering questions and getting them wrong, I felt I learnt more because there wasn't a constant pressure to be right”.

The students recognised the opportunity PeerWise afforded them to judge the level of their fellow classmates and their position within the group. Students in studies of peer assessment have perceived similar benefits of viewing the work of peers and identifying good practice (Davies, 2000). It has been reported that opportunities for self-evaluation (Kitsantas, Robert, & Doster, 2004), such as those provided by PeerWise, can have positive effects on students’ motivation and learning. In addition, it has been argued that reading and contributing feedback allows the students to develop the skills to judge their own work (Nicol, 2011a), which assists in creating self-directed learners and underpins many graduate attributes (Nicol, 2011b). A similar theme to students judging their own level arose where students reported PeerWise as a place to showcase their knowledge/aptitude and that many students enjoyed challenging their peers: “I enjoyed writing questions that people would need to revise for to answer and to read their feedback”.

A third major theme, as shown by Figure 1, is the benefit associated with authoring questions. The idea that ‘to teach a topic to someone one must master the topic’ is often included when discussing PeerWise and peer-instruction. The students perceive that through authoring questions they really had to struggle with their understanding of the topic and that this was one of the major benefits of PeerWise.

“Being able to explain a question to a point that they understand cements your own knowledge. Put it in your own language therefore not just learning something off by heart.”

Authoring questions, particularly multiple-choice questions, can be a challenging process for undergraduates. Multiple-choice questions within PeerWise require a question, the correct answer, several perceived correct answers (‘distractors’) and feedback. This process is challenging, as the author must correctly understand the concept, and the known associated problem areas, in order to write a good question with strong distractors. Despite these perceived difficulties, the standard of PeerWise questions authored by students has recently been demonstrated as being high (Bates, Galloway, Riise, & Homer, 2014). Errors noted in PeerWise repositories were addressed by the community, and the peers within the community were effective at rating the questions written by their peers (Denny, Luxton-Reilly, & Simon, 2009).

Three major themes emerged as the biggest problems with PeerWise as noted by the students, ‘Recurring/Easy Questions’, ‘Silly Questions/Clowning’ and ‘Peer Instruction’. These themes along with sub-themes, in italics, and other minor themes are shown in Figure 2.

Figure 2 Categories of the problems/themes of PeerWise as reported by the students. The area of each section represents the number of coded references of that theme. Sub-themes are shown in italics, (a) represents the ‘Peer-instruction’ theme and the outline is shown by the dashed box.

The students perceived the system as flooded with easy and repetitive questions. The students gave two reasons for this. Firstly, it requires much more effort to create harder questions, and the students do not believe that this effort is rewarded with a sufficient PWS (and hence potential assessment marks); creating numerous easy questions was perceived to be rewarded with a higher PWS than authoring a single hard question. Secondly, the students reported choosing easier questions when answering, as the harder questions require more time and effort and result in a lower PWS for the time invested: “People tend to answer really easy/trivial questions and don't pay attention to original, time consuming ones”.

“The thing is, people that wrote simple questions, such as definitions, got lots of reputation because lots of people answered the simple ones. The trickier ones get answered less and people down rate them when they get them wrong so people writing easy questions and copying out of textbook got better marks than those who put a lot of work in. The system is flawed.”

This type of ‘tactical’ student behaviour has previously been reported by Bates et al. (2014), with easier questions answered approximately twice as much as harder questions, as ranked by Bloom’s taxonomy scale. Bates and co-workers (2014) noted that a large majority of the questions submitted to PeerWise in their study were of high standard. Typically, questions required students to apply rather than recall knowledge. These results contrast with our study, where the students reported a low standard of question. This may be due to the number of questions required as the minimum criteria for the assessment; only one question was required by Bates et al. (2014), whereas we required four questions.

The second major theme, as shown in Figure 2, is that of students creating silly questions or clowning. This was highlighted by many students but it was predominantly in the largest class grouping, group 3: “Irrelevant questions or people not taking it seriously”.

As this is found almost entirely in a single class group, it may indicate that a small group of students can act as a seed for this behaviour and that more students follow suit. Perhaps this is something that can be discussed by instructors when introducing PeerWise. Students can be told they should flag these types of questions and course staff may be able to stop the spread of this behaviour.

The theme named ‘Peer Instruction’ is typical of students’ fears when dealing with peer instruction. The students see it as a problem that they are not being taught by the experts but by each other. Students highlighted that mistakes are being made by fellow students, that some students do not furnish good explanations to questions or that they are unsure of their fellow classmates’ expertise. Students doubting the knowledge and expertise of fellow students is reported in peer assessment (Davies, 2000), near-peer teaching (Bulte, Betts, Garner, & Durning, 2007) and in peer-presentations and role playing (Stevenson & Sander, 2002). It is to be expected that similar concerns would be noted in the use of an online peer-instruction tool. However, a study of questions submitted to PeerWise in a computer science course (Denny et al., 2009) found that 89% of questions created by students were correct, and those that were incorrect were corrected by students within the PeerWise system. A larger study of biochemistry students using PeerWise (Bottomley & Denny, 2011) found 90% of questions to be correct, with half of the incorrect questions recognised by students within PeerWise. In view of these studies, the students’ fears reported here could be addressed by stressing the responsibility of the students to monitor and regulate their own learning environment, correcting their fellow students when needed: “..did not trust the answers (relies on people who are not qualified. If you don't trust their explanation no way of knowing if you are right)”; “Authors making poor questions with incorrect answers or lack of explanations”; “the answers to the questions are not verified by anyone other than the students – risky”; “The answers to some of the questions were wrong”.

Themes which were mentioned approximately half as often as the major themes, as seen in Figure 2, are ‘More Lecturer Involvement’, the ‘Grading System’ and the ‘Comments Section’. The first two themes were each found predominantly in a single class group. More lecturer involvement was asked for by group 2, where the lecturer did not engage with PeerWise outside of the introductory session. Students reported forgetting about PeerWise, and a few students asked that it be integrated into classwork as a reminder. Many of the students in group 5 found the grading system unfair. This group contained some of the highest-achieving students in the study, who may be motivated by grades. Finally, a similar portion of the students across most of the class groups found that the requirement to write four comments meant a plethora of meaningless comments were contributed: “The comments, having to write 4 comments only led to a lot of "good question" and nothing of use”.

A small percentage, about five percent, of those that answered the free-text questions reported inappropriate student behaviour. This included plagiarism, rude or mean comments and unfair ratings of questions in an attempt to ‘rig’ or ‘cheat’ the PeerWise scoring system. Although only a few students felt this to be an issue, it is mentioned here as the authors feel it is of a serious nature. Students can be asked to flag this material, and the instructors can then intervene. As reported by the students in the Likert-type questions, many students are involved in plagiarism. It is clear from the low numbers of responses to this free-text optional section of the questionnaire that the vast majority do not feel strongly about this. Perhaps the students feel that if fellow classmates find a good question in some other text and copy it, at least they are contributing a valid question to the repository. However, further investigation would be needed to support this claim.

Students’ answers to this question were straightforward to categorise, as most were very short and many consisted of a single word. A large majority gave revision as the main reason for engaging beyond the minimum requirement, as shown in Figure 3. The next biggest category was students motivated by grades. A smaller number of students enjoyed the competitive or game aspect of the software, e.g. collecting badges and scores. A small but significant number of students (~10% of respondents) claimed they contributed more as they enjoyed doing so. These answers illustrate the reasons why students contributed far more than the minimum requirement, as shown by the usage data and discussed in the Questionnaire section: “At first I had intended to just do the minimum but found that answering questions really helpful …. I also found myself hooked on trying to earn badges”.

Figure 3 Categories of the reasons students gave for contributing more than the minimum requirement.

In this study, we probed the attitudes of a wide cohort of students across disciplines and institutes. This was done with a questionnaire and using PeerWise activity data to cross-reference when necessary. Students were clearly able to recognise and articulate the benefits of PeerWise as a peer instruction tool and revision aid.

Likert-type questions reveal that the students agree there are benefits to writing, answering and (to a lesser extent) discussing questions with peers. They also agree that they would or did use PeerWise for revision and that they would like to see PeerWise introduced in other modules. Small differences in the different cohorts exist and appear to be between the highest and lowest academic level students (first year level 6/8 and second year level 8). Indeed, the students’ usage of PeerWise indicates that the majority of the students engage with PeerWise and contribute more than the minimum requirements and that a minority (20–25%) make a very large number of contributions.

Much greater insight is provided with the thematic analysis of the free-text responses. The students clearly articulate the benefits of PeerWise as a peer-instruction and revision tool. As a study tool, the students describe the large pool of questions for continual revision; this provides the students with a revision aid for retrieval-based and active learning. The benefits of PeerWise as a peer-instruction tool are typical of those in the literature, e.g. comments on the ‘simple’ language used by their fellow students.

Issues the students found with PeerWise are the flooding of the system with easy and/or repetitive questions and students not respecting the learning environment by posting silly questions. In addition, the traditional fears of students regarding peer instruction are present, i.e. not being instructed by experts.

The students perceive that PeerWise is working as designed, i.e. as a peer-instruction, active-learning revision tool. Many of the problems reported by the students are not inherent problems of the tool itself. Many of these issues do not appear in other case studies on PeerWise and can be dealt with through improved implementations of PeerWise.

It is recommended that:

1. Instructors check for flags that students have placed on questions for their attention.

2. Instructors may show examples of good questions in class when reminding students that PeerWise is a continual assessment tool with a sometimes distant deadline. This will help engage the students.

3. Instructors take care not to be seen to be too involved with PeerWise, as one of the foreseen and student-reported advantages is that this is the students’ own space.

4. Lecturers stress the importance of self-regulating the system. If there are mistakes on the system, it is the students’ responsibility to correct their classmates. In addition, if they see issues arise with bad behaviour (childish questions, bullying, plagiarism etc.) they should flag this.

Dr Mac Raighne is an Assistant Lecturer at the Dublin Institute of Technology and a recipient of a Teaching Fellowship and Learning and Teaching Award from the Dublin Institute of Technology. Corresponding author, aaron.macraighne@dit.ie. CREATE Research Group, School of Physics, Dublin Institute of Technology, Kevin Street, Dublin 2, Ireland

Dr Casey is a University Teacher at the University of Glasgow. She is also the Treasurer of the Higher Education Group in the Institute of Physics, UK. Her interests are in the areas of student academic performance, peer-learning and retention in higher education. School of Physics & Astronomy, University of Glasgow, Glasgow G12 8QQ, U.K.

Dr Robert Howard is a lecturer at the Dublin Institute of Technology. His interests are in the areas of problem-based learning, peer instruction and physics education research. CREATE Research Group, School of Physics, Dublin Institute of Technology, Kevin Street, Dublin 2, Ireland.

Dr Ryan is an applied biochemist whose pedagogic research focuses on the integration of novel technology into the teaching and learning environment and the effect of assessment, feedback and blended learning on undergraduate learning. School of Food Science and Environmental Health, Dublin Institute of Technology, Cathal Brugha St., Dublin 1, Ireland.

This work was supported by a Teaching Fellowship granted by the Learning, Teaching and Technology Centre in the Dublin Institute of Technology.